Preface

About this Guide

HQbird User Guide contains detailed description of functions and features of HQbird — advanced Firebird distribution, including with configuration examples and best practices recommendations.

About IBSurgeon

IBSurgeon (https://www.ib-aid.com) was founded in 2002 with the idea to provide InterBase and Firebird developers and administrator with services and tools focused on databases safety, performance and availability. In Russia, IBSurgeon is mostly known as iBase.ru, famous by its Russian InterBase and Firebird portal www.ibase.ru. IBSurgeon is a member of Firebird Foundation and, as a member of Technical Task Group, has strong relationship with Firebird Project, with direct representatives in Firebird-Admins and in Firebird Foundation Committee. Today, IBSurgeon serves thousands of companies worldwide with emergency, optimization and maintenance tools and various services. Our clients are medical institutions, financial organizations and ISVs in Germany, Brazil, Russia and other countries, and all who have applications based on Firebird and/or InterBase. The flagship project of IBSurgeon is HQbird, the advanced distribution of FirebirdSQL for big databases with enterprise features.

1. Overview of HQbird

1.1. What is HQbird

HQbird is a distribution of the Firebird DBMS for enterprises from IBSurgeon Software (www.ib-aid.com[https://www.ib-aid.com]), which includes additional functions that mainly improve performance for large and highly loaded databases, and a set of tools for organizing a full cycle of database maintenance without a DBA (including tools for performance optimization, monitoring, local and cloud backups, and recovery in case of failures).

HQbird speeds up the performance of large databases (from 50 GB to 2 TB) and enables companies to manage large databases without requiring a dedicated database administrator for a few servers, or lowering support expenses for many (hundreds and thousands) Firebird servers. The minimal hardware requirements for HQbird are 8Gb RAM and 4 cores.

The first version of HQbird was released in 2015, the current version is HQbird 2024.

1.2. How is Firebird related to HQbird?

To put it simply, HQbird is an enterprise version of the open-source Firebird DBMS. In keeping with the tradition of open-source projects, we call Firebird the “vanilla” version: in the same way, there is a “vanilla” version of PostgreSQL and commercial versions of EnterpriseDB, PostgresPro, etc.

HQbird is not a “different” database in terms of compatibility with Firebird: there is no need to make a backup-restore when switching between HQbird and Firebird, no need to rewrite SQLs or change client applications.

Without any problems, you can install HQbird and the vanilla version of Firebird in parallel on the same server, work with the database file using HQbird, then switch to the vanilla Firebird, and vice versa.

HQbird’s 100% compatibility with vanilla Firebird is the most important feature of HQbird! Almost all functions that are developed for HQbird end up in Firebird within 1-2 versions: for example, replication appeared in HQbird for version 2.5, and it appeared in vanilla version 4.0, external connection pooling was developed in HQbird 3.0, and appeared in Firebird 4.0, multithreaded backup, restore, sweep capabilities appeared starting with HQbird 2.5, and became available in vanilla Firebird 5.0, etc.

In addition to the new functionality, bugs fixed in HQbird are also fixed in the corresponding versions of vanilla Firebird.

Also, IBSurgeon Software provides public testing of Firebird and HQbird in terms of reliability and performance: the testing results are published on the website www.firebirdtest.com.

1.3. What is the price of HQbird?

The permament license for 1 server is USD$899. It also includes 1 replica server license.

What will be the price of HQbird for a company that needs to use not 1 or 2 servers, but several hundred or even thousands of installations? If the number of servers is more than 20, purchasing permanent licenses (USD899/server) becomes too expensive. That’s why we offer HQbird Unlimited Subscription for software development companies.

HQbird Unlimited Subscription for software development companies costs USD$1200/month (or USD$13450/year with upfront payment), for the annual contract (please note, that regional pricing can be different).

This is a special license for software development companies that allows you to install and use an unlimited number of HQbird copies along with business applications (ERP, CRM, etc) produced by the company.

For example, if a company has 40 clients, then the subscription will cost USD$1200/month, that is, approximately $30 per client per month. If the software development company has 400 clients, then the cost for 1 client per month will be $3 per month.

1.4. What’s new in HQbird 2024

HQbird 2024 is a new major version that adds support for Firebird 5.0 and a number of important features:

-

Replacing queries "on the fly"

-

Improved Firebird Streaming technology:

-

Added control file, with the same format used for replication in Firebird 4 and higher

-

Added recovery of the

fb_streamingservice after a failure (replication segments are not deleted until the transactions started in them are completed or rolled back. After a failure, transactions that have not yet been committed are repeated). -

Added new Kafka CDC plugin (Change Data Capture)

-

In addition, HQbird 2024 still offers advanced replication features, external connection pooling, prepared statement pooling, and other features needed when working with large databases under high load.

1.5. Feature matrix

Below is a matrix of features and their level of support in the various versions of Firebird included with HQBird.

| # | Feature | V5.0 | V4.0 | V3.0 | V2.5 | Level |

|---|---|---|---|---|---|---|

1 |

High-performance native replication |

X |

X |

X |

X |

server |

2 |

Replacing queries “in the air” |

X |

X |

X |

X |

server |

3 |

Plugins to access ODBC/MySQL through Execute Statement On External |

X |

X |

X |

plugin |

|

4 |

Caching BLOBs in temp space |

X |

X |

X |

X |

server |

5 |

Improvements in optimizer for JOINs and large sortings |

X |

X |

X |

server |

|

6 |

Cache of compiled statements |

X |

X |

X |

server |

|

7 |

Streaming/Change Data Capture (plugins for Kafka, JSON, etc) |

X |

X |

plugin |

||

8 |

Full Text Search |

X |

X |

X |

plugin |

|

9 |

Multi-thread backup/restore/sweep, creation of indices |

X |

X |

X |

X |

server |

10 |

Parallel Reading of consistent data |

X |

X |

X |

X |

server |

11 |

Pool of External Connections |

X |

X |

X |

server |

|

12 |

Encryption |

X |

X |

X |

server |

|

13 |

Automatic correction of |

X |

X |

X |

X |

server |

14 |

Advanced Monitoring of Performance (trace, MON, locks, CPU, RAM, frequency) |

X |

X |

X |

X |

tools |

15 |

Monitoring of queries with large sortings |

X |

X |

X |

X |

server |

16 |

Manage (and configure replication) many databases at once with command-line tools |

X |

X |

X |

X |

tools |

17 |

Backups, Restore, and Automatic Backup/Restore |

X |

X |

X |

X |

tools |

18 |

Transfer backups, segments, etc through FTP/sockets/Amazon S3 |

X |

X |

X |

X |

tools |

19 |

Advanced database maintenance: proper garbage collection and more |

X |

X |

X |

X |

tools |

20 |

Multi-instance support |

X |

X |

X |

X |

tools |

21 |

Silent installation on Windows and Linux |

X |

X |

X |

X |

tools |

22 |

Tool to analyze database statistics |

X |

X |

X |

X |

tools |

23 |

Tool to analyze connections/transactions/memory consumption/IO operations |

X |

X |

X |

X |

tools |

24 |

Recovery tools |

X |

X |

X |

X |

tools |

25 |

Optimized configurations |

X |

X |

X |

X |

tools |

1.6. Brief Description of HQBird Features

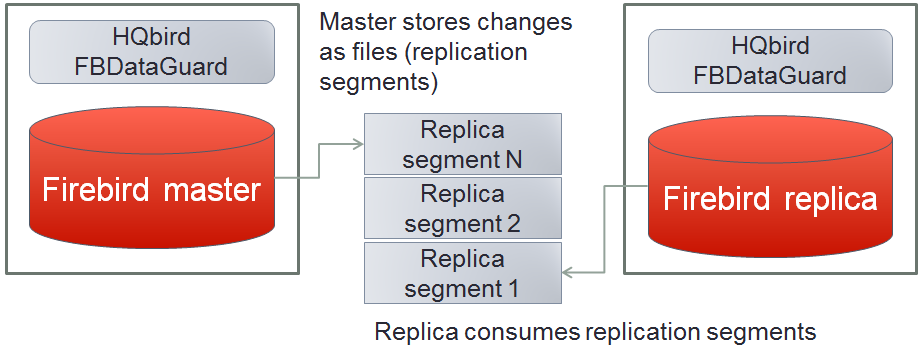

1.6.1. High-performance native replication

HQbird includes native replication to create fault-tolerant systems based on Firebird databases:

-

Replicates databases with 1500+ connections

-

Asynchronous replication with 1-30 seconds delay,

-

Synchronous replication without delay,

-

No triggers or other changes in schema required

-

Automatic propagation of DDL changes,

-

Online re-initialization of replicas.

-

Embedded transport for replication changes, verification of transferred replication segments

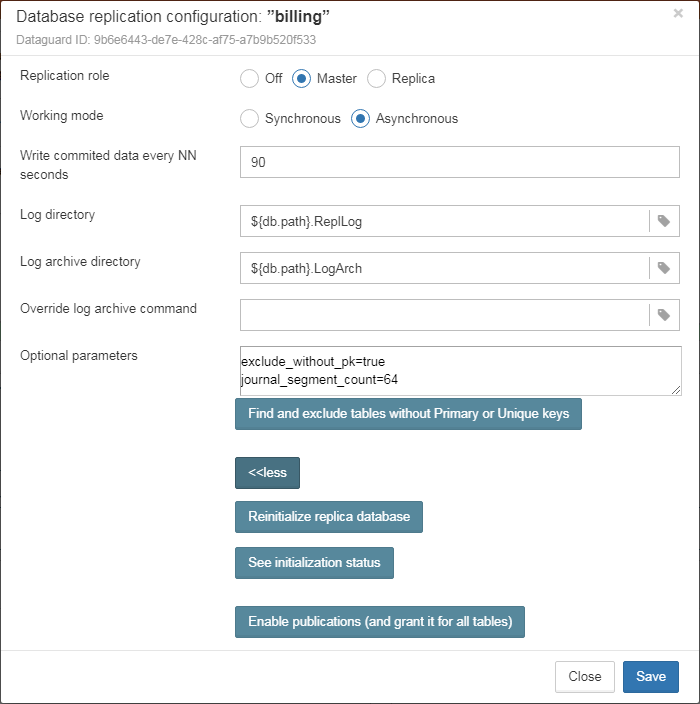

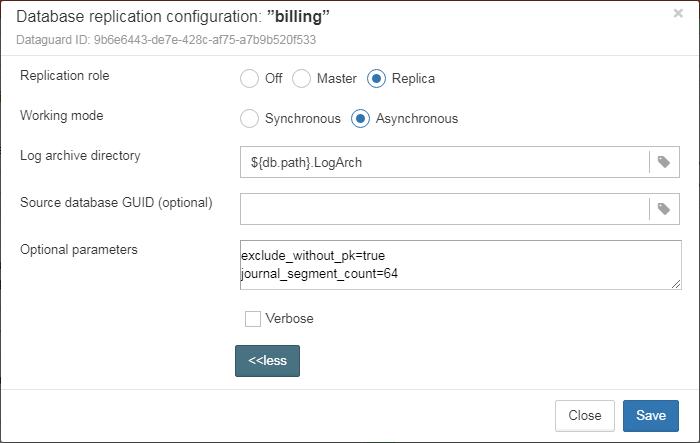

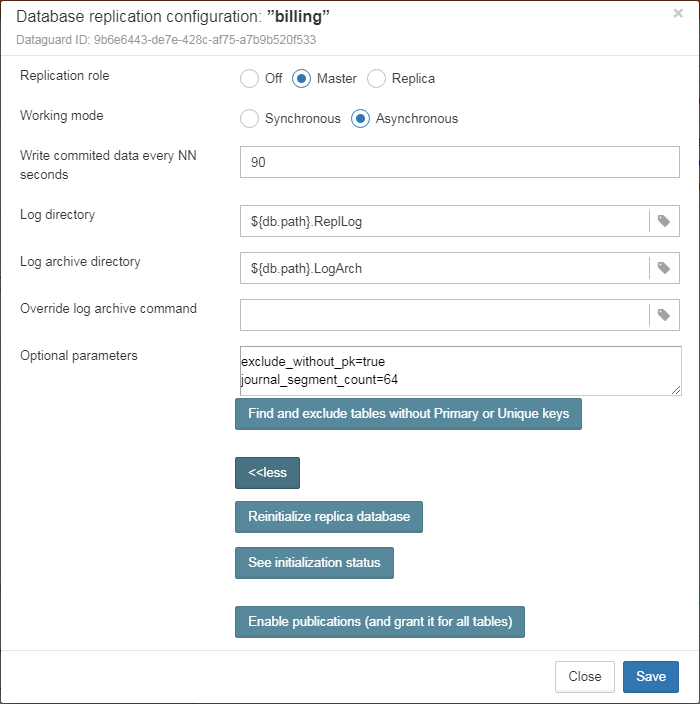

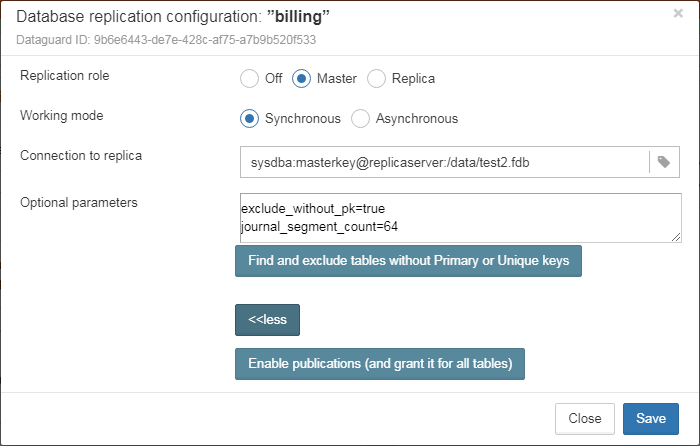

Native replication is configured through the special plugin, with the ability to exclude records without PK/UK at the plugin level.

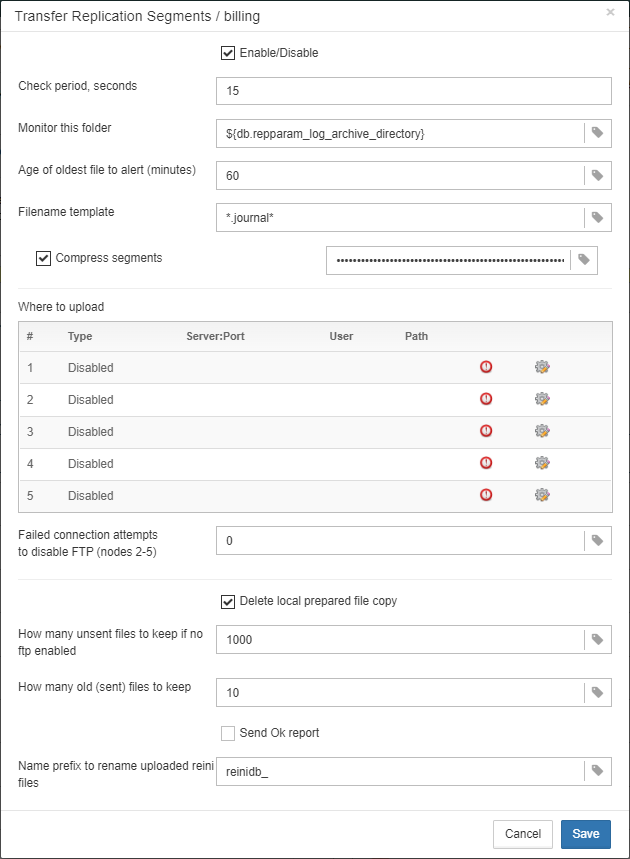

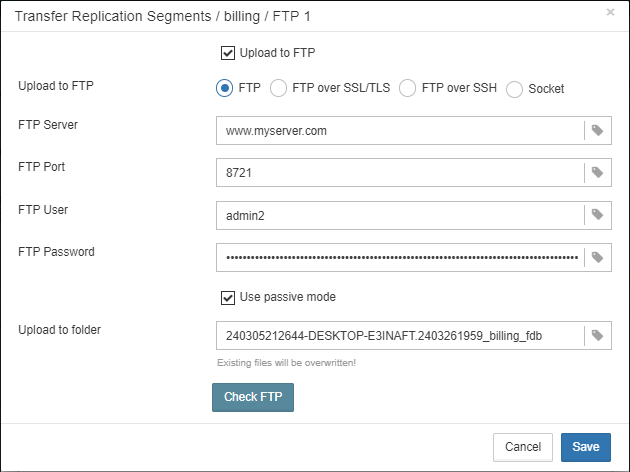

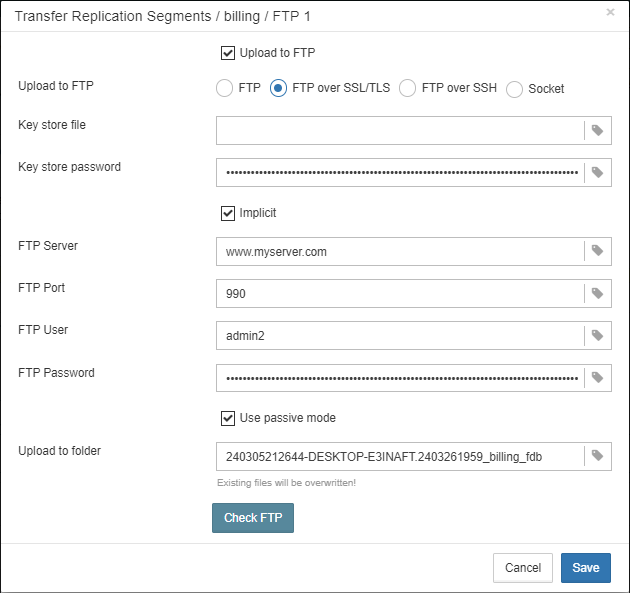

HQbird has complete transport to arrange transfer of segments for asynchronous replication for 1-to-1 or 1-to-many schemas, with automatic setup, transfer and validation of replication segments via sockets or FTP. HQbird has command-line commands to set up databases for replication in bulk, to choose databases in the folder, or in nested folders.

1.6.2. Replacing queries "on the fly"

If you have an application with inaccessible or missing sources, HQbird can help you change texts of incompatible or most resource-consuming SQL queries “on the fly”, and therefore help to optimize the performance or migrate an application without SQL queries sources. The replacement is easy configurable, it is implemented by pairs of files which contains text of original and replaced queries.

With Advanced Monitoring, you can find SQL queries that cause issues and then configure the substitution for them, even without access to the application’s source code. The replaced query will occur in trace and MON$ tables with the new text.

1.6.3. Plugins for performing external connections with MySQL and ODBC

HQbird has External Datasource plugins for ODBC and MySQL. Using these plugins, it is possible to execute commands EXECUTE STATEMENT ON EXTERNAL with

queries to MySQL or ODBC data source, in order to read data from external datasources, or to write data to external datasources.

Plugins support input parameters and correct mapping of data types (however, in case of ODBC it depends on the specific driver implementation).

See example of an external connection below:

execute block

returns (

emp_no bigint,

birth_date date,

first_name varchar(14),

last_name varchar(16),

gender char(1),

hire_date date

)

as

declare dsn_mysql varchar(128);

begin

dsn_mysql = ':mysql:host=localhost;port=3306;database=employees;user=root';

for

execute statement q'{

select

emp_no,

birth_date,

first_name,

last_name,

gender,

hire_date

from employees

order by birth_date desc limit 5

}'

on external dsn_mysql

as user null password 'sa'

into

emp_no, birth_date, first_name,

last_name, gender, hire_date

do

suspend;

end1.6.4. Caching blobs in temp space

HQbird can cache BLOBs in temp space, in order to speed up BLOBs operations (+15%-200% faster than in vanilla Firebird), and to prevent growth of the database file in case of mistaken BLOB operations.

HQbird uses an extra firebird.conf parameter BlobTempSpace to control this feature.

The caching option can be:

-

0 — disabled,

-

1 — enabled for PSQL (default),

-

2 — enabled for all blobs operations.

1.6.5. Improvements in optimizer for JOINs and large sortings

LeftJoinConversion / OuterLeftConversion

HQbird can automatically convert implicit inner joins to explicit ones for better optimization in versions 3 and 4.

To activate this feature, change the LeftJoinConversion setting in firebird.conf to true. HQbird in v5.0 supports the OuterLeftConversion option that is available in the vanilla version 5.0.

SortDataStorageThreshold / InlineSortThreshold

HQbird can optimize queries that involve large sorting operations. In versions 2.5 and 3.0, you can use the SortDataStorageThreshold setting to activate the Refetch plan for this purpose.

In the vanilla version 4.0, this setting is renamed as InlineSortThreshold. Usually, we recommend to set SortDataStorageThreshold to 8192 or 16384 bytes.

1.6.6. Cache of compiled queries

This feature can improve the performance of repeated queries, especially when using a connection pool (PHP, etc).

Cache keeps a certain number of prepared queries in each connection’s memory. HQbird has this cache in versions 3.0 and 4.0, and you can adjust it with the DSQLCacheSize setting (default is 0, i.e., disabled).

In vanilla version 5.0, there is a comparable feature, regulated by the MaxCompiledCache option, which is measured in Megabytes, the default is 2Mb.

1.6.7. Streaming/Change Data Capture (plugins for Kafka, JSON, etc)

Firebird Streaming is a technology that tracks changes in the database and sends them to another system, such as Kafka, JSON files, RabbitMQ, full text search plugin, etc.

HQbird offers a replication-based Change Data Capture plugin. The plugin creates a change flow that reflects transaction commits/rollbacks.

HQbird provides ready-made plugins for Kafka, RabbitMQ, JSON files, and also supports their configuration for any destination. CDC is useful for processing queues, sending alerts asynchronously, and copying changes to other systems (such as business intelligence or data science pipelines).

CDC plugin available upon request. For more information, contact IBSurgeon support ([email protected]).

See more Firebird Streaming

1.6.8. Full-text search

Full-text search is a technique that allows you to search for any word or phrase within a large collection of documents or data. Full-text search is different from searching based on metadata or partial text, which may not capture the full meaning or context of the query. Full-text search uses a full-text engine, such as Lucene, to perform the search and return the results.

IBSurgeon Full Text Search UDR is a user-defined routine (UDR) that integrates Lucene with Firebird. A UDR is a custom function that can be called from SQL statements. IBSurgeon Full Text Search UDR allows you to perform full-text search on Firebird tables in varchar and BLOB fields using Lucene engine.

This UDR is available in open source, but HQbird, provides a customizable plugin based on streaming for operational update.

More details: https://www.firebirdsql.org/en/full-text-search-udr/

1.6.9. Multi-threaded backup, restore, sweep, creation of indices

HQbird implements multi-thread maintenance (sweep), backup, restore, and create index operations. Firebird 2.5, 3.0 and 4.0 are supported, and this functionality also appeared in Firebird vanilla version 5.0.

The format of backup files is the same as in the vanilla Firebird. On the test server with CPU with 8 cores and SSD, we have the following results (compared with 1 thread);

-

Backup — 4-6x times faster

-

Restore — 2-4x time faster on CPUs with 8 cores and SSD

-

Sweep — 4-6x time faster

The actual acceleration depends on CPU, disk subsystem of the server, and structure of the database. Install HQbird in the trial mode (up to 30 days) and check what results will be on your server!

More details and test results can be found here: https://ib-aid.com/articles/firebird-gbak-backuptips-and-tricks#110hqbirdbackup

1.6.10. Parallel reading of consistent data

HQbird, starting from version 2.5, supports two important features:

-

make_dbkey()function, which enables reading a table that is partitioned by physical storage blocks (from pointer pages), -

and “shared snapshot” transaction mode, which facilitates parallel operations in multiple connections.

These features help to achieve parallel reading of large data sets, and to accelerate 2-10x times export operations (such as for BI exports or data pipeline). These features are also available in Firebird vanilla, from version 4.0.4 onwards.

-

More details are in the article: https://ib-aid.com/articles/parallel-reading-of-data-in-firebird

-

Example application & sources: https://github.com/IBSurgeon/FBCSVExport

1.6.11. Pool of external connections

HQbird has a pool of external connections for Firebird 2.5, 3.0, and this pool is also available in vanilla version since 4.0.

An external connection pool allows you to execute EXECUTE STATEMENT ON EXTERNAL statements with less overhead in reconnecting to the external database.

The feature is controlled in the firebird.conf with ExtConnPoolSize and ExtConnPoolLifeTime parameters.

From the application perspective, no extra steps are needed to use or not use — it is switched on or off in the server configuration, and completely transparent for the applications. It is also possible to disable garbage collection for queries executed in external connections. It is regulated through configuration parameter ExtConnNoGarbageCollect.

See details: Pool of external connections

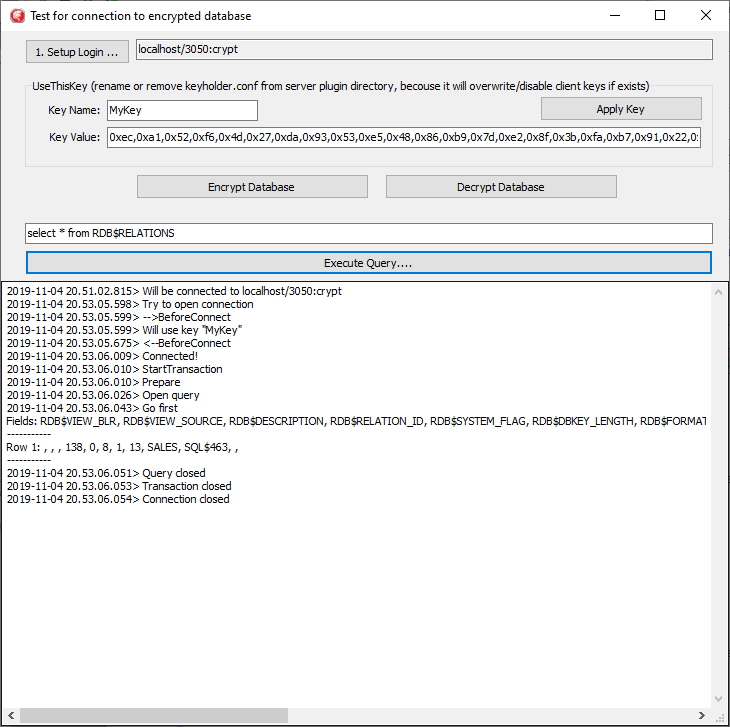

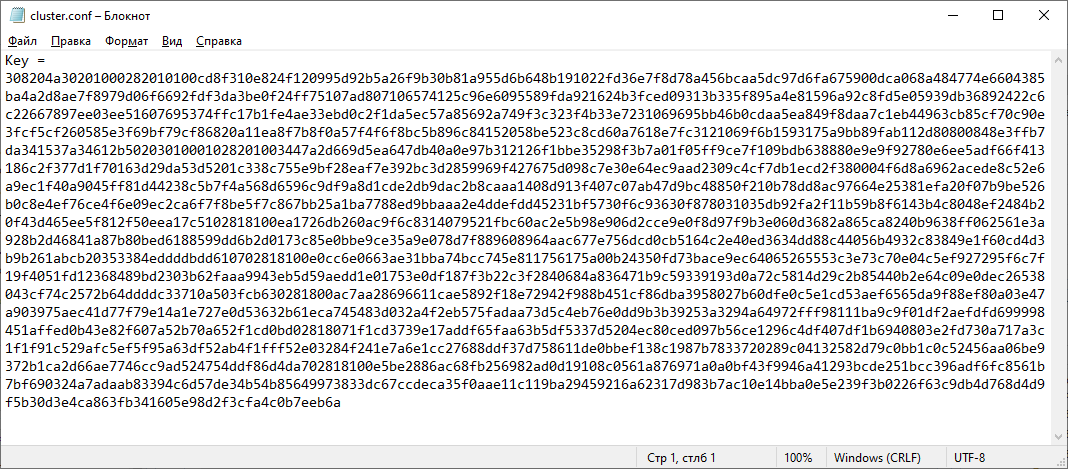

1.6.12. Encryption

HQbird supports encryption with Encryption Framework’s Plugin. The main features are:

-

DB encryption plugin (available on demand) for versions 3, 4, 5, Windows & Linux. Comprehensive and fast encryption plugin framework, with AES256. Performance loss is between 4%-20%, depending on the RAM and configuration.

-

Support for multi-thread work (for middleware applications, with connections to multiple databases).

-

Sending keys through

fbclient.dllto implement encryption without changing the application. If you have a database tool that does not support key transfer, or a third-party application, key can be sent throughfbclient.dllwith a special configuration. -

Password input window for

fbclient.dllin Windows and password input on the terminal in Linux.

We can offer examples of client applications in various languages, such as Delphi, NET, Java, PHP, C++, etc., upon request.

1.6.13. Automatic correction of firebird.conf (DefaultDbCachePages)

Incorrect configuration of DefaultDbCachePages in firebird.conf, databases.conf or in database header is a common configuration mistake, which often happens during the migration between versions. For instance, it can be too large values of Page Buffers in database header for Classic or SuperClassic, or too low for SuperServer.

HQbird will automatically fix the wrong setting in firebird.conf and databases.conf and it will overwrite, if the configuration is unsuitable for a selected architecture.

1.6.14. Advanced Monitoring of Performance (trace, MON, locks, CPU, RAM, frequency)

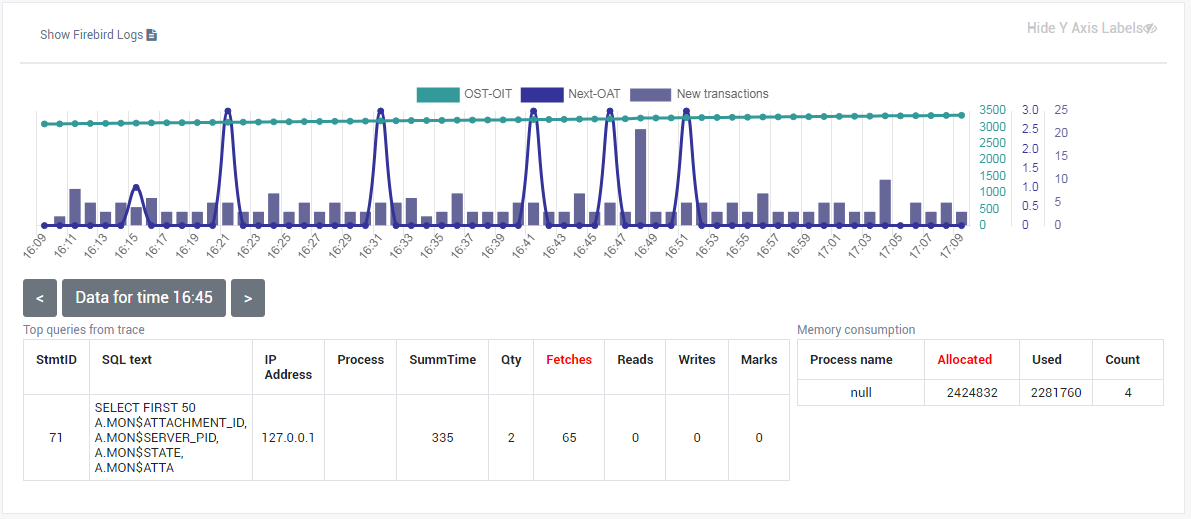

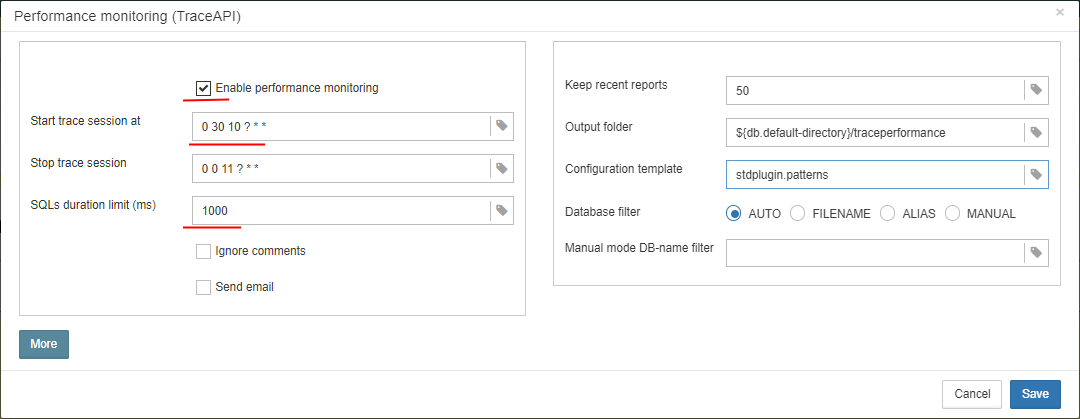

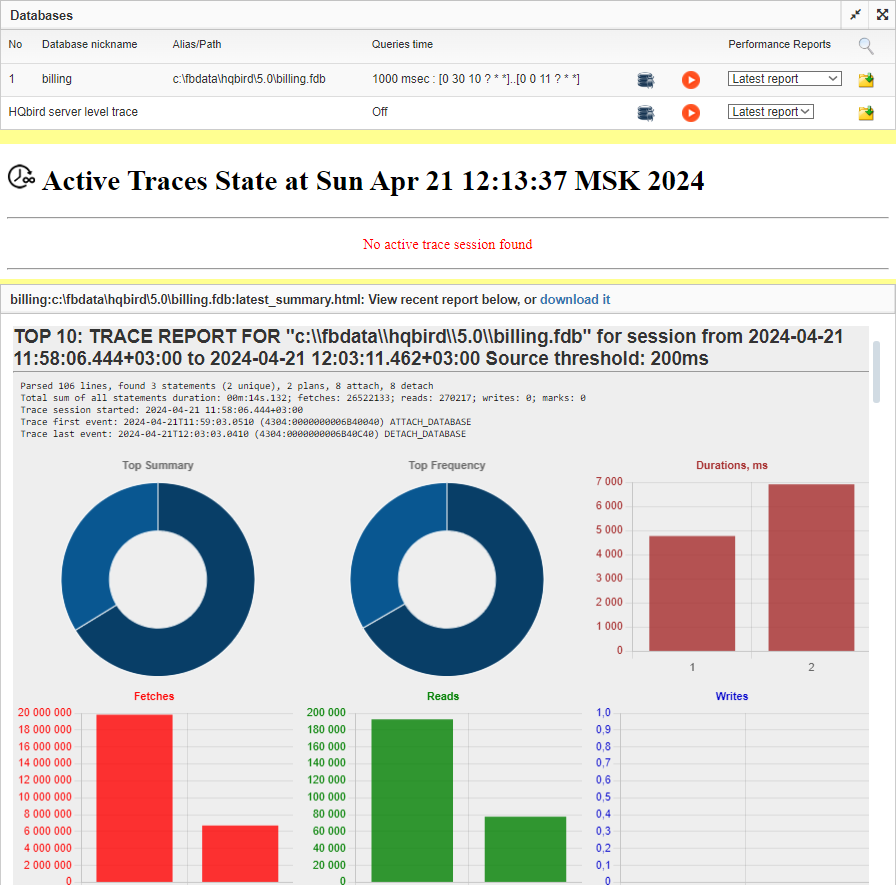

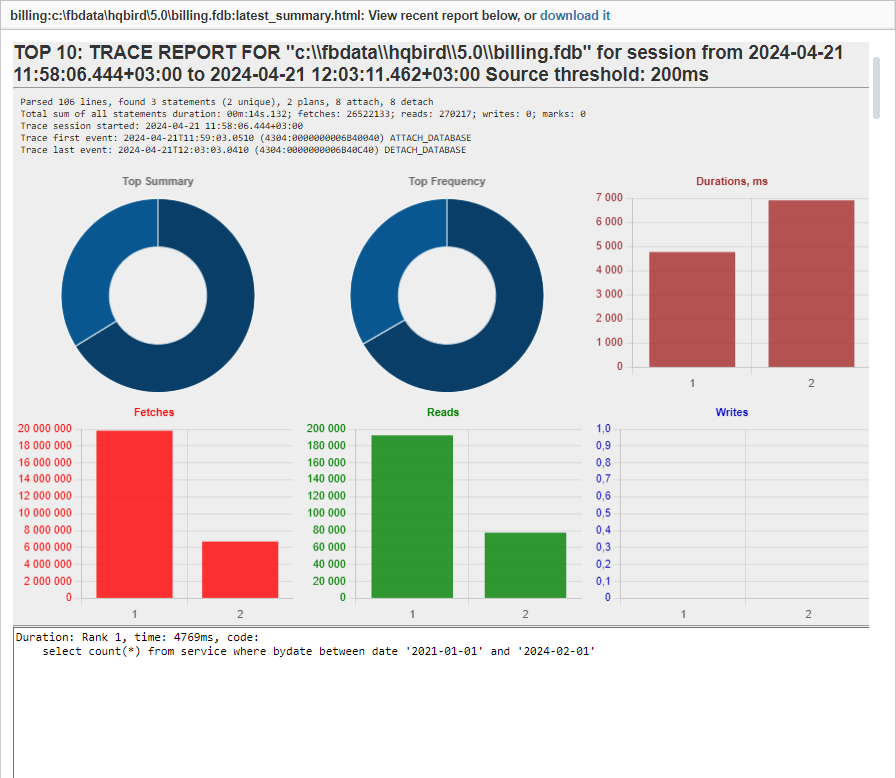

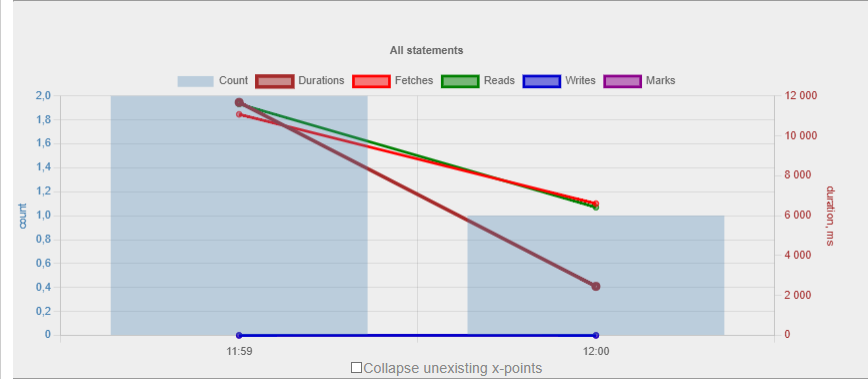

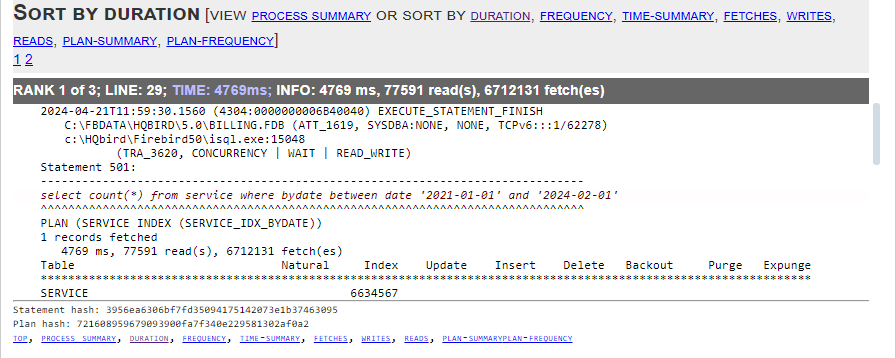

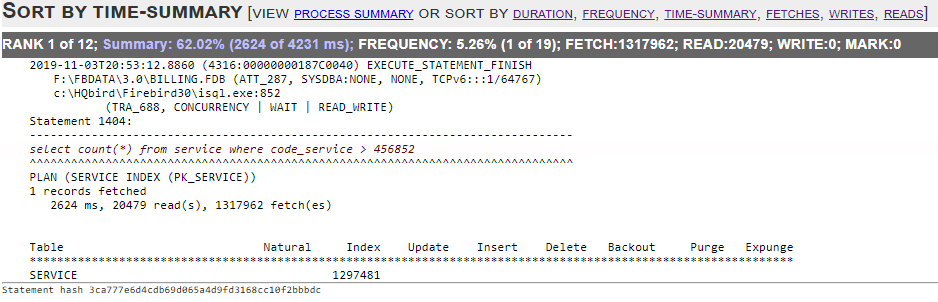

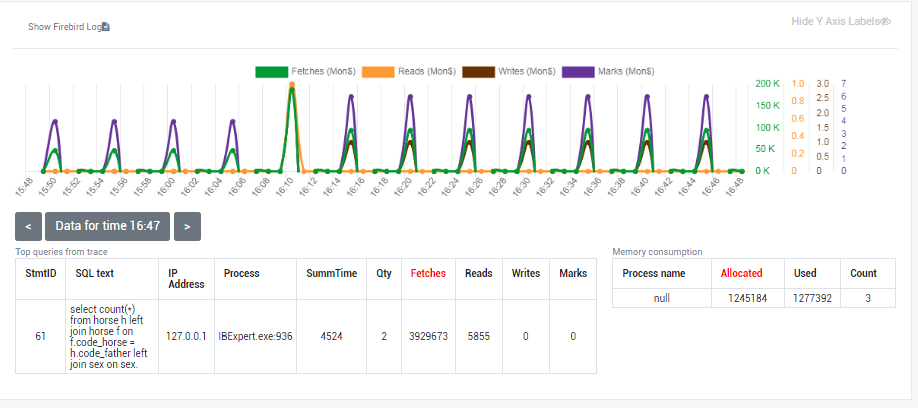

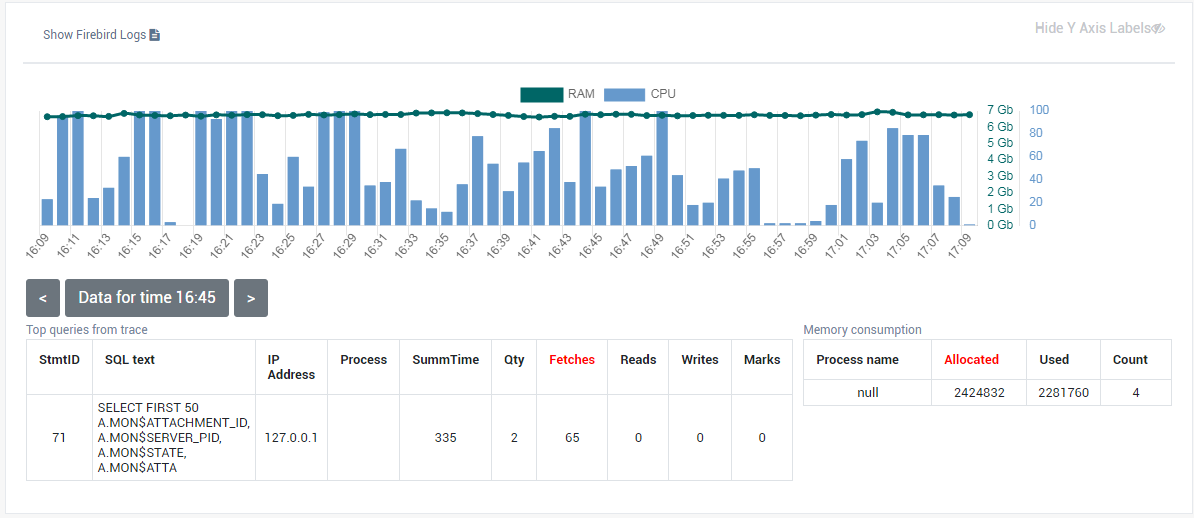

Advanced Monitoring of Performance in HQbird is a feature that allows you to monitor and analyze the performance of your Firebird databases (version 5.0, 4.0, 3.0, 2.5) in real time. It collects data from various sources, such as Trace API, MON$ tables, lock table, transactions, CPU and RAM usage, and displays them in graphical and tabular forms. You can see the overall performance trends, as well as drill down to the details of each minute, query, or transaction.

You can also identify performance problems, such as slow and frequent queries, long-running transactions, lock table spikes, etc., and view their plans and statistics.

-

More details: https://ib-aid.com/monitoring-in-hqbird

1.6.15. Monitoring of queries with large sortings

This feature helps to troubleshoot queries that produce large reports, where many records need to be sorted. HQbird can track queries and operations that create sorting files larger than a given size. When such a query is detected, its text is recorded to firebird.log

Configured as a TempSpaceLogThreshold parameter in firebird.conf, which defines the size of the sorting file for monitoring.

1.6.16. Manage (and configure replication) many databases at once with command-line tools

If you have many databases stored in the folder, and want to register all of them in HQbird to setup replication, in HQbird v2024 there is new command-line command to generate JSON file from the folder (recursive or not) with the registration information, which can be used for mass registration.

From replica side, there is special version of HQBird Central for Replicas, which allows to store hundreds of replicas (from different servers) on the single server. HQbird Central for Replicas is shipped by request.

1.6.17. Backups, Restore, and Automatic Backup/Restore

-

Backups: HQbird implements all types of backups with sophisticated or simple scheduling (all can be done online, with connected users):

-

Verified backup with

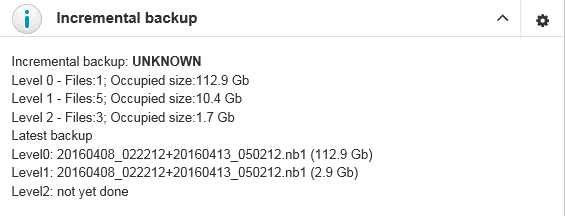

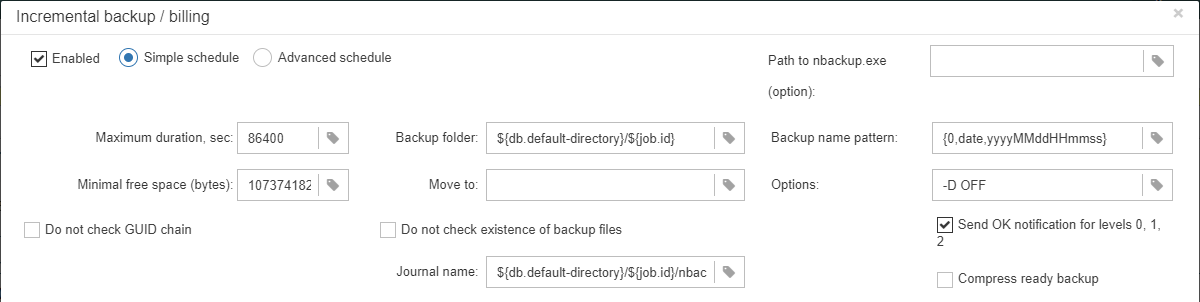

gbak.exe. The traditional Firebird backup format when Firebird reads every record in the database, guaranteeing that database is healthy. In HQbird (versions 2.5-5.0) verified backup is very fast due to multi-thread support. HQbird implements rotation of verified backups, compression, and test restore. HQbird calculates necessary space for backups to ensure that backup will fit into the free space, and creates detailed logs for all operations. -

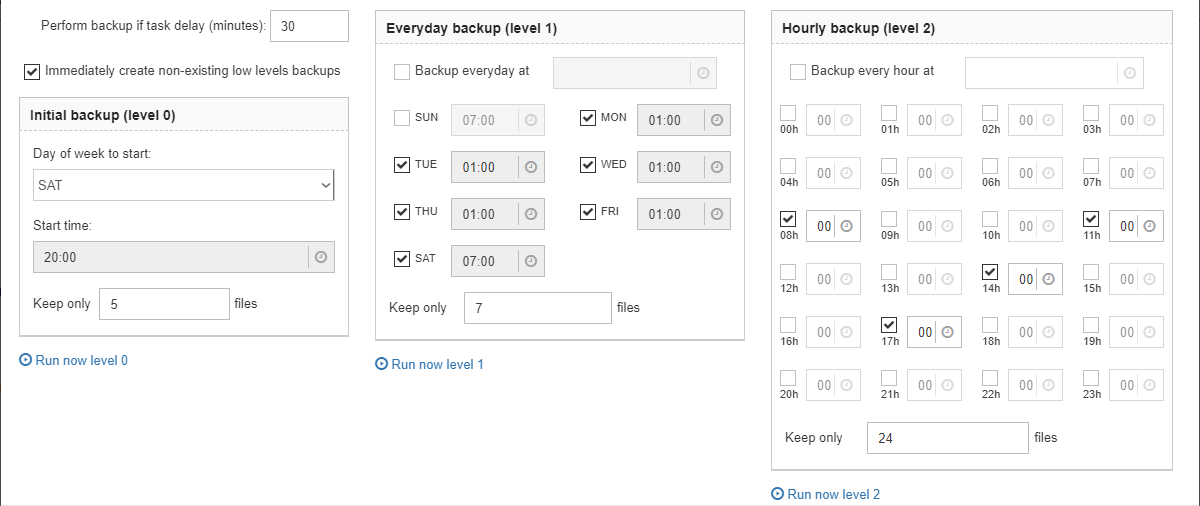

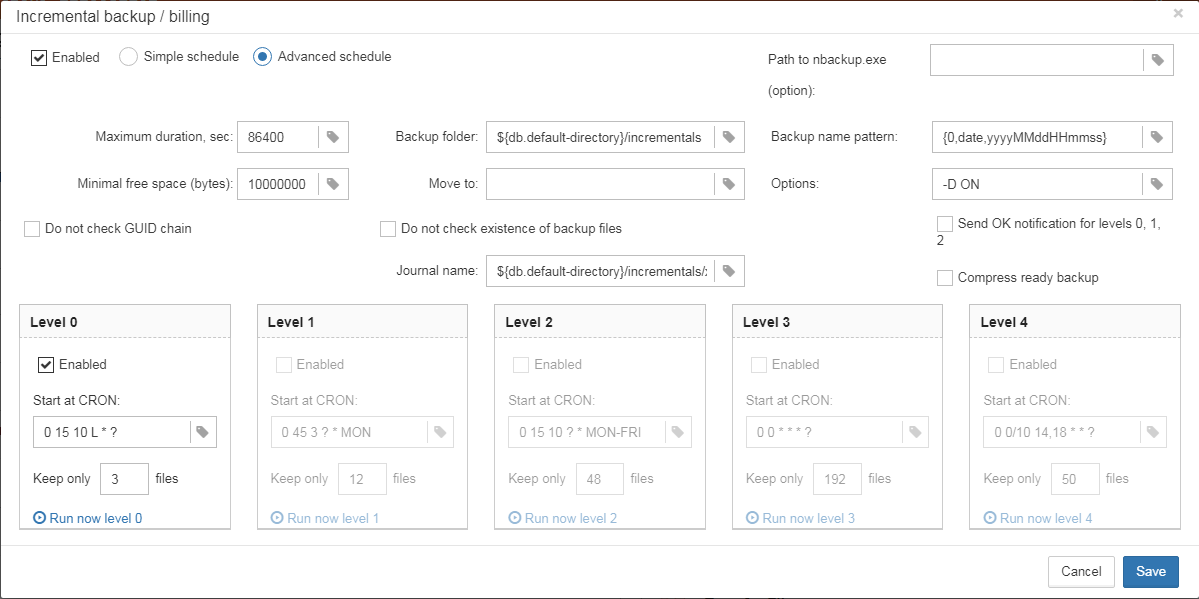

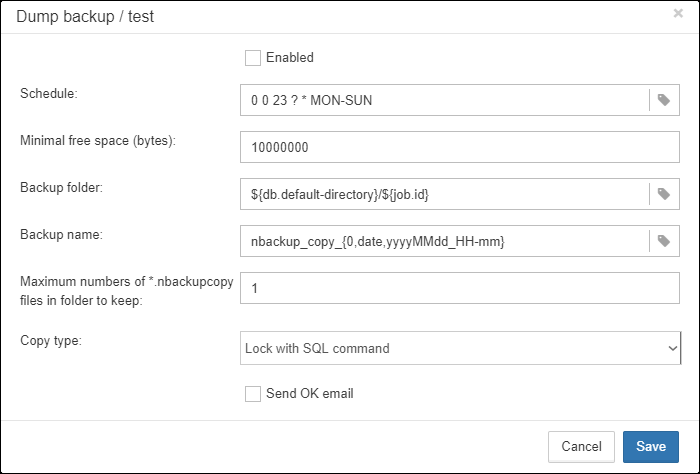

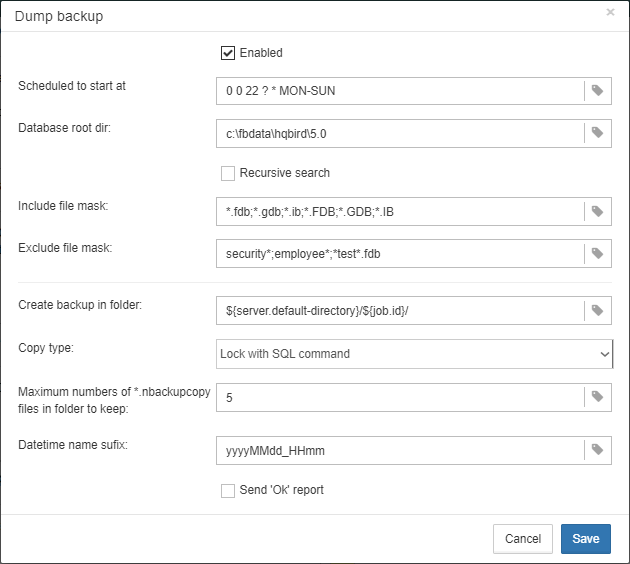

Incremental backup. The fast physical level backup which copies changed data pages. HQbird offers 3 backup schemes: simple weekly 3-levels backup, enhanced multi-level backup (up to 5 levels), and dump backup to create a copy of the database. Backup files are rotated, the necessary space is calculated.

-

-

Restores

-

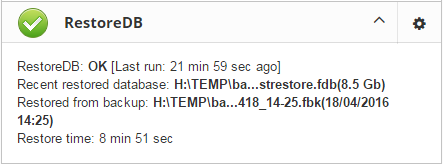

Restore your databases from backups. HQbird allows to restore database from FBK. It is especially important for cloud instances, when FBK is uploaded to the cloud instance, so there is no necessity to connect to server’s console (i.e., ssh or RDP).

-

Test restore, as part of verified backup process. You can opt to perform test of restore of fresh backup, it will be done as a part of verified backup restore process.

-

Scheduled restores. It is possible to organize scheduled restores of verified (gbak) backups and/or incremental (nbackup) backups, for example, as part of backup infrastructure.

-

-

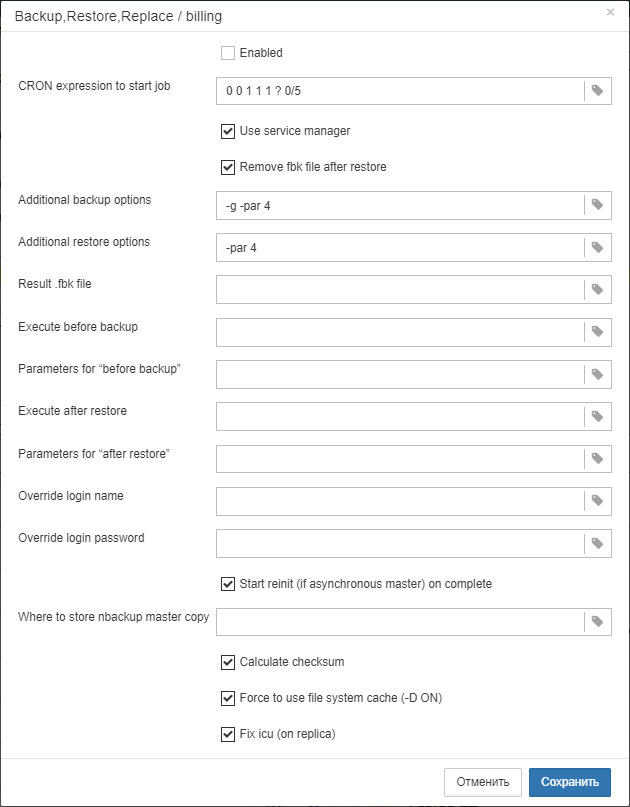

Automatic backup-restore. Support of full backup-restore cycle, both planned and by request. HQbird will do the full backup-restore in the safe and fast manner: stop all users, do backup and restore, enable users. The old copy of the database will be kept. In case of a problem the process will be reverted. If there will be not enough space, backup-restore will not start.

With HQbird, you can always keep track of your backups and avoid losing them, no matter how many databases you have or where they are.

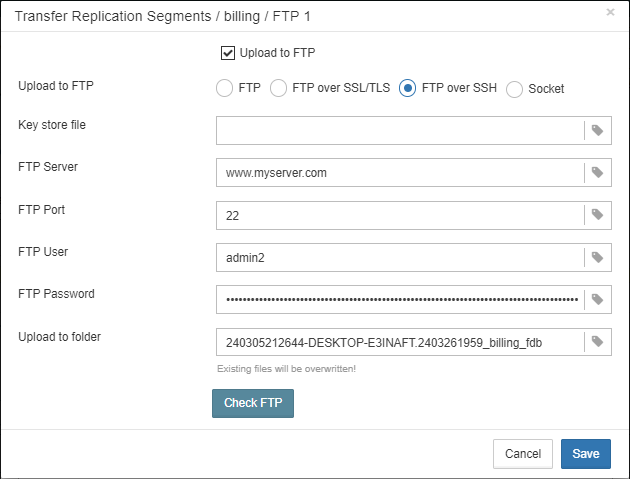

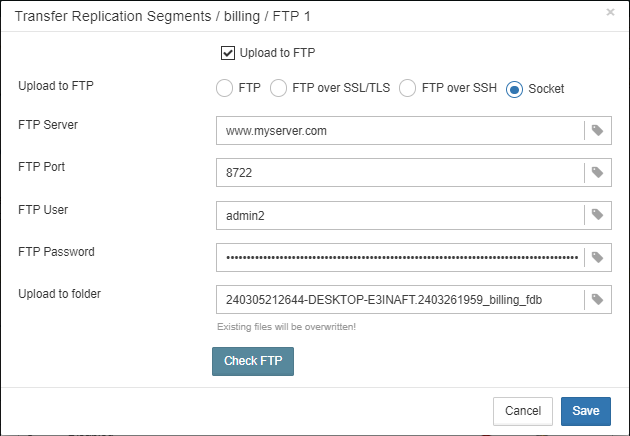

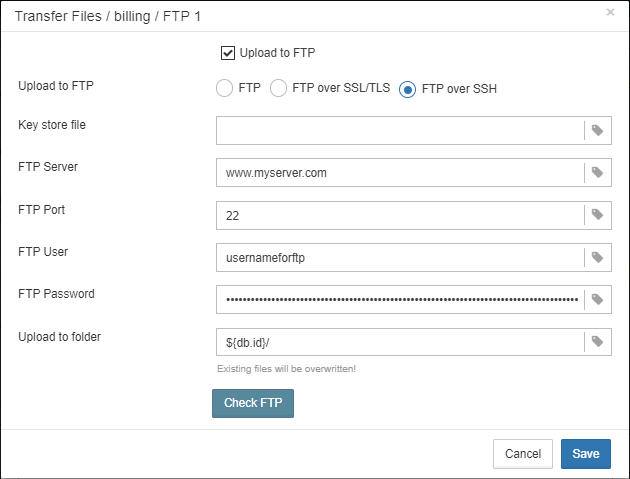

1.6.18. Transfer backups, segments, etc through FTP/sockets/Amazon S3

HQbird can transfer backups (or other files by mask) via FTP, sockets, or to Amazon S3 (needs plugin which is available on demand).

HQbird also has built-in FTP server and sockets server with easy setup.

1.6.19. Advanced maintenance: proper garbage collection and more

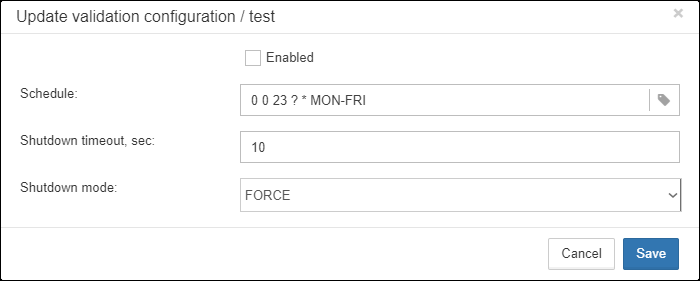

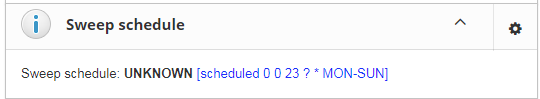

Excessive record versions, also known as garbage versions, slow down Firebird databases significantly. HQbird implements the proper combination of sweep operations and “soft” shutdown of long running writeable transactions, and allows to avoid frequent database backups/restores. With HQbird it is recommended to do backup/restore no more than once per year.

Maintenance can also include the recalculation of indices statistics and the verification of indices health, as well as the examination of metadata health.

1.6.20. Multi-instance support

HQbird allows installation of multiple Firebird instances of different versions on the same server. It makes migration from one version to another easier. HQbird for Windows installs all supported Firebird versions (5.0, 4.0, 3.0, 2.5) by default, each instance with a different port. You can choose to install only one version, or several versions, during the installation.

To install HQbird for Linux with multiple instances, please use united installer (it is a new feature of HQbird v2024), and indicate what versions you want.

1.6.21. Silents installation on Windows and Linux

The fastest way to install HQbird is to use the silent installation in the command line.

In the example below we will install HQbird with Firebird 3.0 into c:\HQbird, the configuration will be c:\HQbirdData\config, output in c:\HQbirdData\output.

HQbirdServer2024.exe /VERYSILENT /SP- /TYPE="hqbird30x64" /DIR="C:\HQbird2020" /CONFIGDIR=C:\HQBirdData\config /OUTPUTDIR=C:\HQBirdData\output

See also:

-

How to setup on Linux: Installing HQbird Server on Linux

-

More details: Silent installation on Windows

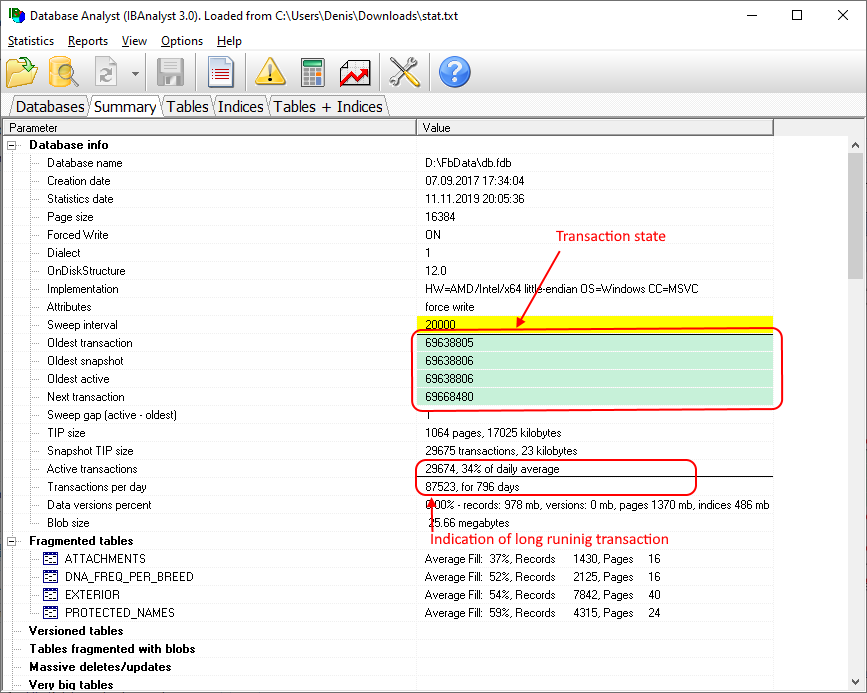

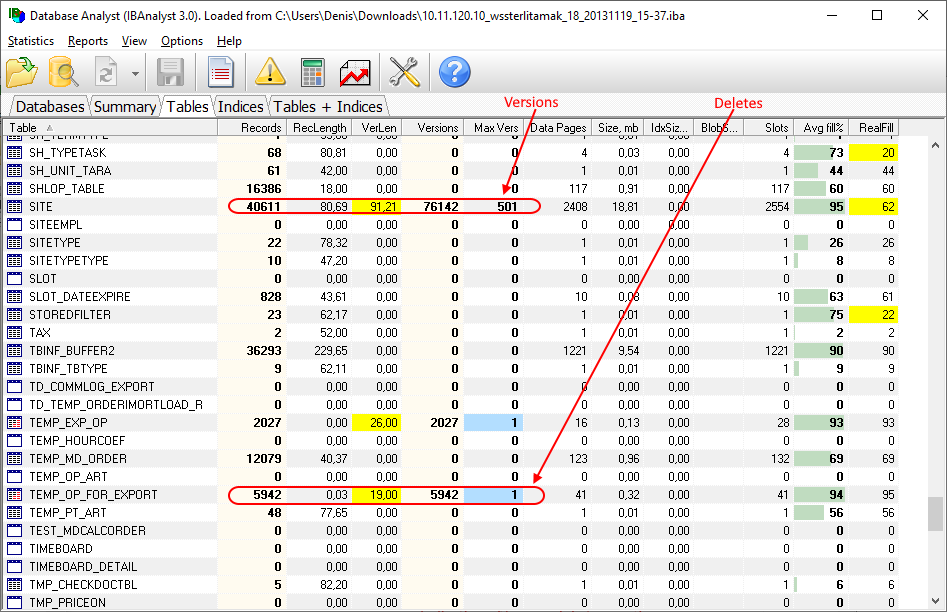

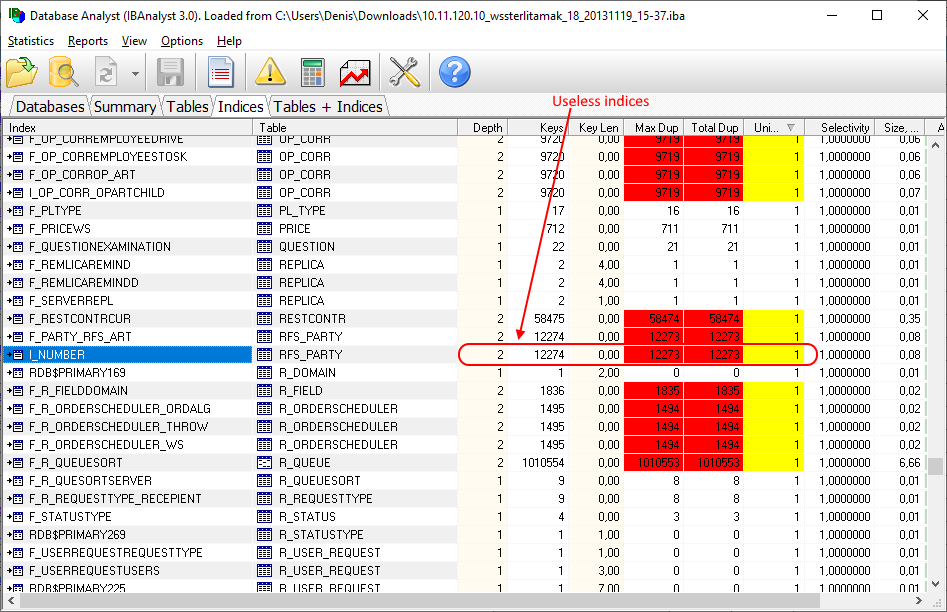

1.6.22. Tool to analyze database statistics

HQbird’s Admin package (it runs on Windows), includes Database Analyst, a tool that assists a user to analyze in detail Firebird database statistics and identify possible problems with database performance, maintenance and how an application interacts with the database. IBAnalyst graphically displays Firebird database statistics in a user-friendly way and highlights the following problems:

-

tables and BLOBs fragmentation,

-

record versioning,

-

garbage collection,

-

indices effectiveness, etc

More details: Database structure analysis

1.6.23. Tool to analyze connections/transactions/memory consumption/IO operations

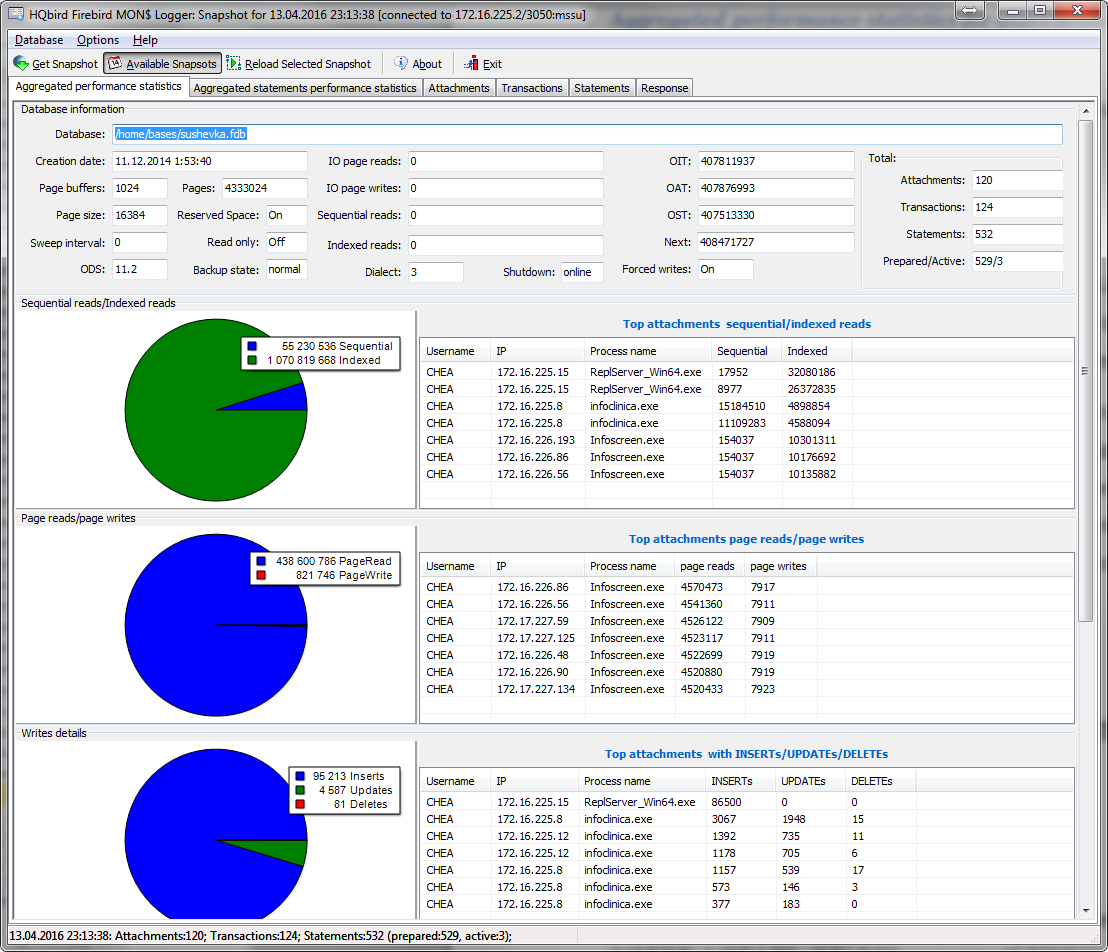

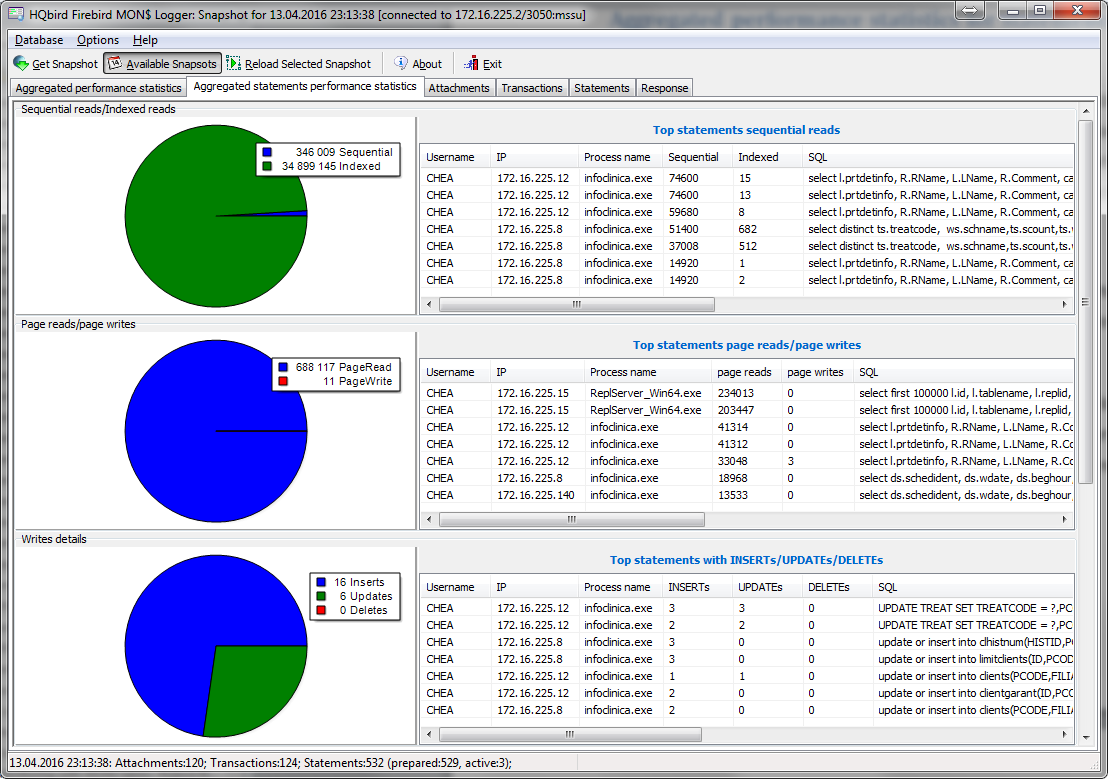

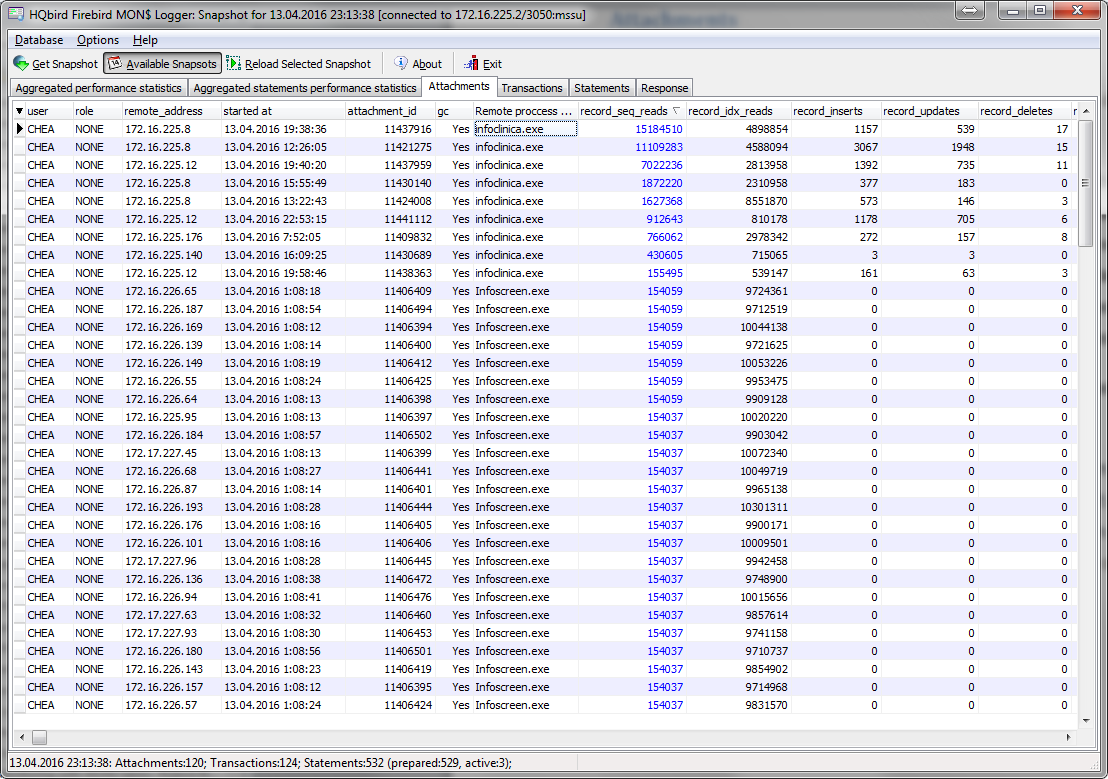

HQbird MonLogger is a tool to analyze monitoring tables output in Firebird and find problems with slow SQL queries, wrongly designed transactions (long-running transactions, transactions with incorrect isolation level, etc) and identify problematic applications.

MonLogger can connect to Firebird database with performance problems and identify what is the reason of slowness: is it some user attachment, slow SQL query or long-running transaction?

MonLogger supports Firebird 2.1, 2.5, 3.0, 4.0 and 5.0 — for older Firebird versions or InterBase please use FBScanner (it is not included in HQbird, should be purchased separately).

MonLogger can show you:

-

Top attachments with highest number of IO operations, non-indexed and indexed reads

-

Top SQL statements with highest number of IO operations, non-indexed and indexed reads

-

Problematic transactions: long-running transactions, transactions with erroneous isolation level, read/write transactions, and related information: when they started, what applications started these transactions, from what IP address, etc

-

Attachments and statements with the most intensive garbage collection actions

-

Read/write ratio, INSERTS/UPDATE/DELETE ratio, and more.

1.6.24. Recovery tools

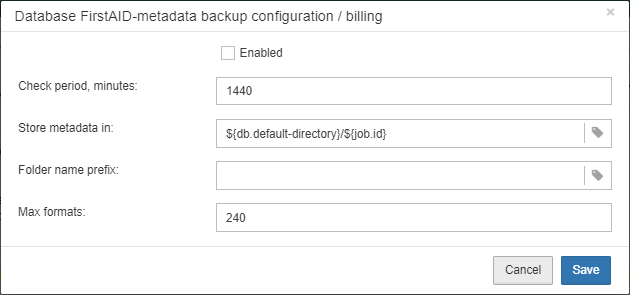

HQbird includes license of FirstAID, recovery toold for Firebird. IBSurgeon FirstAID is the tool that can automatically diagnose and repair corrupted Firebird or InterBase databases — it can recover corruptions that neither gbak nor gfix can fix. Supported versions: Firebird 1.0, 2.0, 2.1, 2.5, 3.0, 4.0, 5.0, InterBase from 4.0 to 2020.

It uses its layer for low-level database access without using the InterBase or Firebird engine, so it can perform real "surgical" operations and repair your database when all other standard mechanisms (gfix and gbak) cannot.

1.6.25. Optimized configurations

HQbird comes with the optimized configuration by default to make the best use of resources of powerful servers and Virtual Machines. To improve HQbird configuration, you can use Cofiguration Calculator for Firebird, where you can choose “HQbird”, to obtain the basic optimized configuration for your system here: https://cc.ib-aid.com/democalc.html.

Please note that Calculator produces conservative configurations, and to create customized configuration, you need to monitor and analyze performance logs. IBSurgeon can assist you to create the ideal configuration in the context of Optimization/Configuration/Audit Incident for Firebird: https://ib-aid.com/en/firebird-interbase-performance-optimization-service/

2. Installation of HQbird

HQbird contains 2 parts: Server and Admin. Let’s consider how to install them.

2.1. Installing HQbird Server on Windows

HQbird Server 2024 includes Firebird 2.5, 3.0, 4.0 and 5.0 with replication, multi-thread support and other enhancements as part of its installer, so Firebird must be installed as part of HQbird.

It is mandatory to install Firebird bundled with HQbird Server installer, if you plan to use replication (it also requires HQbird Master license, Replica or Trial) and other enhancements.

Optionally you can choose to not install Firebird binaries shipped with HQbird – in this case, make sure that installed version is compatible (2.5.x, 3.0.x, 4.0.x, 5.0.x).

Please note, that only Firebird 2.5, 3.0, 4.0 and 5.0 are fully supported in HQbird. We offer the comprehensive Firebird migration service with guaranteed and fast result to migrate Firebird to the latest version.

2.1.1. Silent installation on Windows

The fastest way to install HQbird is to use the silent installation command.

In the example below we will install HQbird with Firebird 5.0 into c:\HQbird2024, configuration will be c:\HQbirdData\config, output in c:\HQbirdData\output.

HQBird2024.exe /VERYSILENT /SP- /TYPE="hqbird50x64" /DIR="C:\HQbird2024" /CONFIGDIR=C:\HQBirdData\config /OUTPUTDIR=C:\HQBirdData\output

The following parameters are mandatory to perform the silent installation:

-

/VERYSILENT /SP- options to perform the silent installation -

/TYPE– what HQbird version should be installed. If you are doing silent upgrade, make sure the version is the same as it was installed previously.-

"HQBird25x64" - "HQbird (with Firebird 2.5 x64)";

-

"HQBird30x64" - "HQbird (with Firebird 3.0 x64)";

-

"HQBird40x64" - "HQbird (with Firebird 4.0 x64)";

-

"HQBird50x64" - "HQbird (with Firebird 5.0 x64)".

-

-

/DIR- where to install HQBird. If you are doing silent upgrade, make sure the version is the same as it was installed previously. -

/CONFIGDIR– where to store configuration data for HQbird. -

/OUTPUTDIR– where to store output data (default location for backups, performance reports, etc).

Optional parameters for the silent installation of HQbird:

-

/fbport=3050- port for Firebird to be installed with HQbird -

/LOG=C:\temp\HQBirdServerSetup.log- where to store installation log -

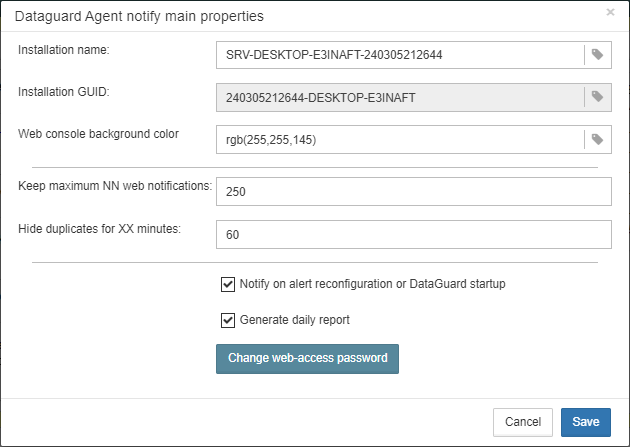

DataGuard parameters:

-

/DGPORT=8082– port for web interface of HQbird (FBDataGuard) -

/DGLOGIN=admin– login for web interface of HQbird (FBDataGuard) -

/DGPASSWORD=strong password– password for web interface of HQbird (FBDataGuard) -

/DISSVC- disable all installed services (use function IsDisableServices) -

/DISMONSVC- disable installed services except DG (use function IsDisableMonServices)

-

-

Automatic registration parameters:

-

/REGEMAIL=[email protected]- email to perform the automatic registration of HQBird -

/REGPASS=yourpassword– password from IBSurgeon Deploy Center account to register HQbird -

/REGTYPE=R|M|T== Replica, Master, Trial – license type, must be specified if you need to register HQbird during the installation

-

-

Offline registration (incompatible with REG **)

-

/REGUIK=<uik filename> -

/REGUNLOCK=<unlock filename>

-

Must be set in pairs, both are required!

/REGUIK="z:\HQBird\test\uik" /REGUNLOCK="z:\HQBird\test\unl"

-

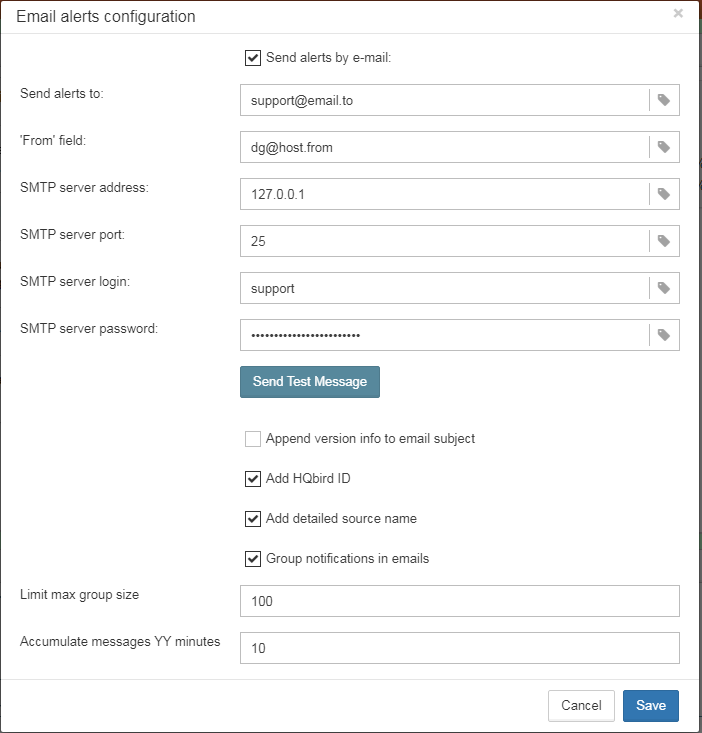

Email alerts parameters:

-

/EAHOST=smtp.company.com– SMTP server for email alerts -

/EAPORT=25– SMTP port for email alerts -

/EALOGIN=support– SMTP login to send email alerts -

/EAPASSWORD=psw– SMTP password to send email alerts -

/EATO=[email protected]– where to send email alerts -

/EAFROM=[email protected]– from address -

/EAENABLED=true– enable or disable email alerts -

/EADEFALT=true– send a copy of email alerts to IBSurgeon Control Center

-

-

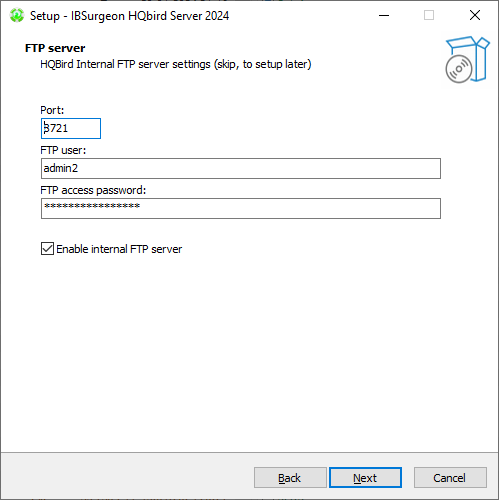

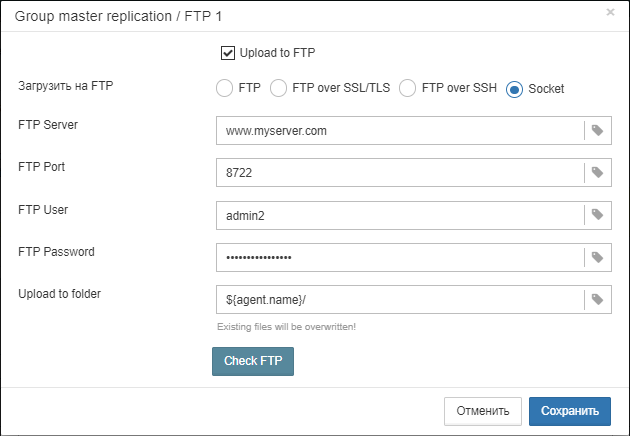

Built-in FTP server parameters:

-

/FTPENABLED=true– enable or disable FTP server -

/FTPPORT=8721- FTP port -

/FTPLOGIN=admin2- FTP login -

FTPPASSWORD=strong password2- FTP password

-

Please note, that in a case of error, for example, if you are trying to run silent installation to install HQbird to the location which is different from the current location, the error message window will popup and installation will be canceled.

2.2. Installing HQbird Server for Windows using the installer

Download HQbird from https://ib-aid.com/en/download-hqbird

The HQbird server distribution contains only 64-bit versions of the Firebird engine; 32-bit versions of Firebird are provided upon user request.

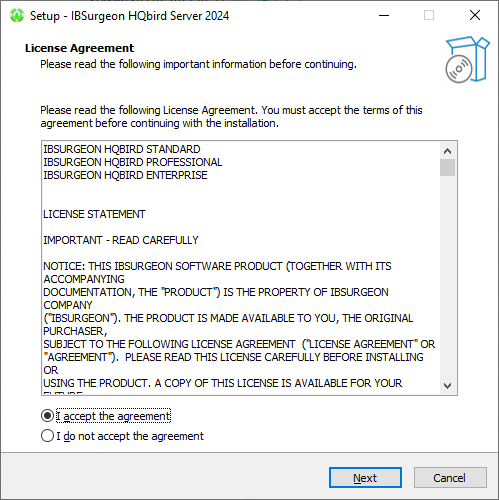

Make sure that HQbird installer is signed with valid IBSurgeon certificate («iBase LLC») and run it:

The HQbird Server Side installation wizard will be launched after that and it will take you through several steps, such as agreeing to the license agreement and selecting the installation folder.

At first, the installer will ask you where to install HQbird:

We recommend to use the default location c:\HQbird, but you can use any suitable location.

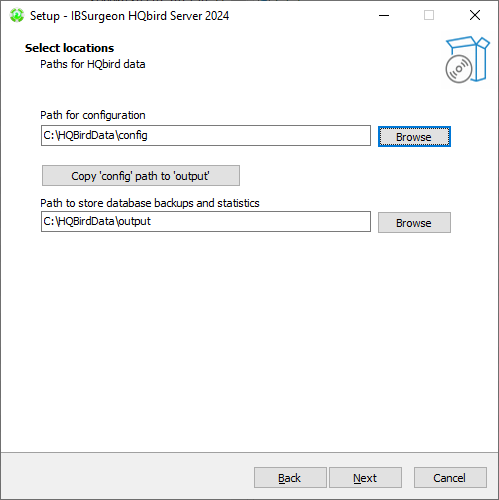

After that, you should select folders for storing configuration files, backup copies of databases, statistics and HQbird log files:

By default, the installation wizard offers to create folders for configuration and log files in C:\HQbirdData.

|

Usually, we recommend selecting a disk with a large amount of free space for this purpose, but you can configure it later. |

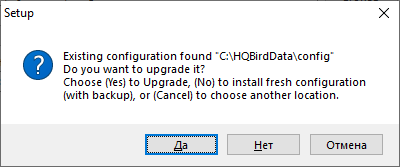

If configuration files already exist in the selected location, the installation wizard will display the corresponding warning:

We recommend the automatic upgrade, so default answer should be Yes.

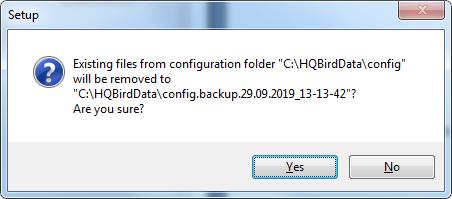

However, you can choose to create fresh configuration of HQbird, and click No – it this case the installer will warn you that existing configuration files will be moved:

In case of choosing Cancel, you need to specify the different location for the configuration and output/backup files.

After you confirm it, the folder with the existing configuration files will be renamed and the installation will continue.

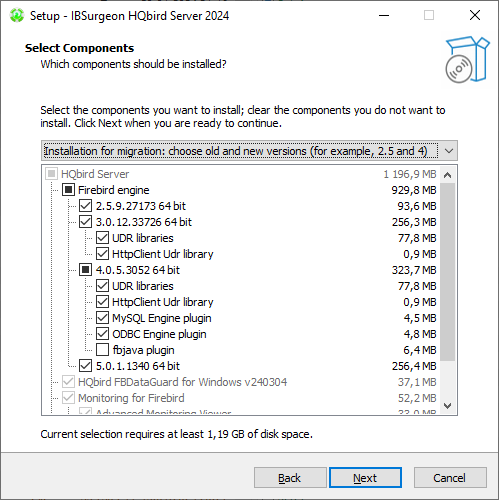

After that, you will see the installation step where you can select components to be installed:

We recommend that you install all HQbird components and Firebird, to avoid further configuration. All HQbird modules are installed in the inactive mode and do not affect the operation of the Firebird server until they are configured or used.

If you choose to install HQbird with Firebird, by default each version of Firebird will be installed in a subfolder of the HQbird installation. By default for each version of Firebird:

-

C:\HQbird\Firebird25 -

C:\HQbird\Firebird30 -

C:\HQbird\Firebird40 -

C:\HQbird\Firebird50

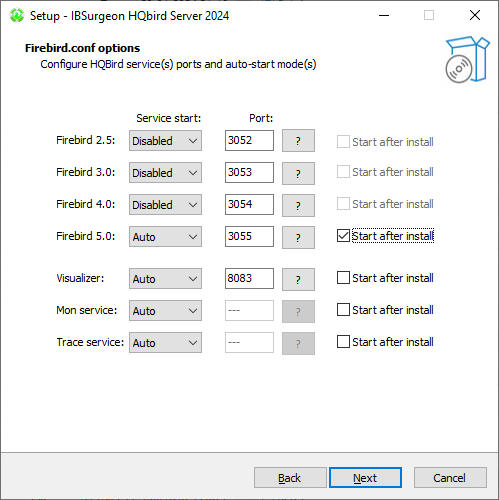

The installation wizard will then ask you to specify the port for each version of Firebird installed alongside HQbird:

If the port is occupied by another running Firebird, the installation wizard will warn you and prompt you to select a different port. Or you can stop and remove another Firebird service.

Here you can select services that will start automatically when the system starts.

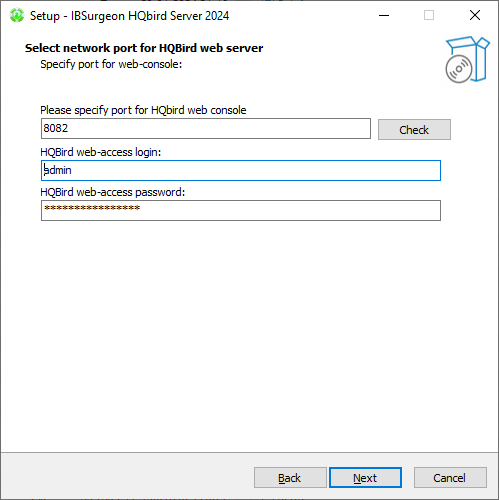

Then, you will be asked to specify the port for HQbird FBDataGuard (web interface to manage HQbird):

We recommend to keep 8082, but sometimes this port can be occupied, so you can change it.

Default password: strong password

In the next step, you can set the built-in FTP server settings.

Default password: strong password

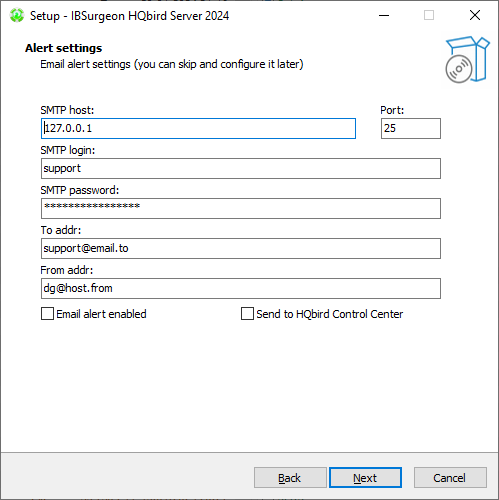

After that, the installer will ask about email settings to be used to send email alerts:

|

You can skip this step: all email alerts can be set later in web interface. |

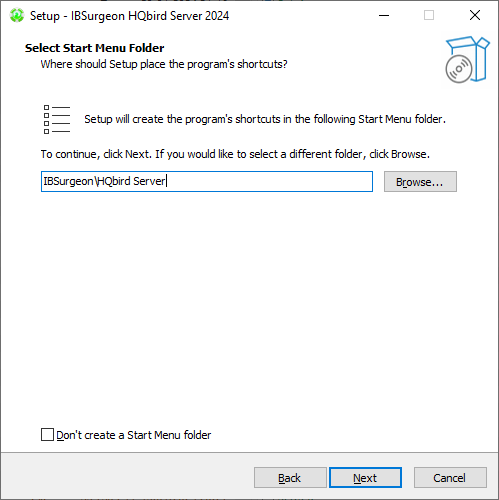

Then, you can specify the folder name and location in Windows menu:

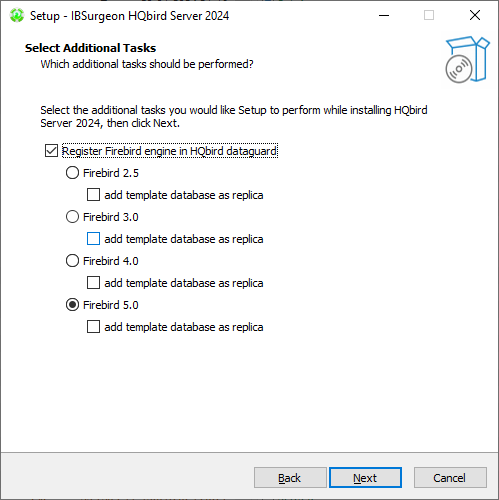

At the next step the installer will offer you to pre-configure HQbird to be used as master or replica server:

You can skip this step, this configuration can be done later.

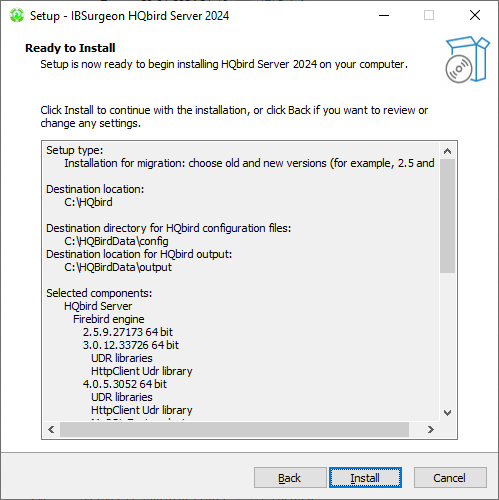

The final step is a summary of components to be installed and their paths:

After that you have to activate HQbird (see How to Activate HQbird) and proceed to configure the HQbird components.

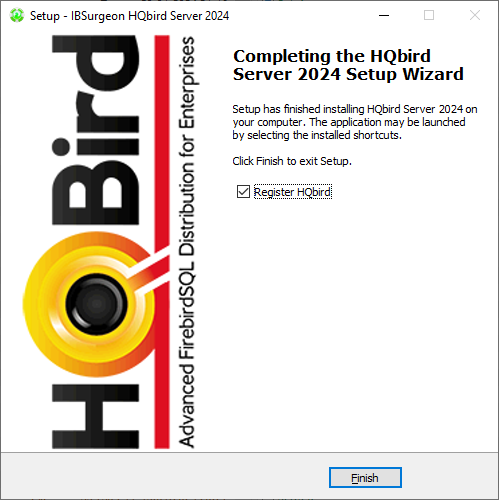

At the end of installation process, you will be asked about next steps:

2.3. Installing HQbird Administrator on Windows

To install HQbird Administrator, download the distribution package from link: https://ib-aid.com/en/hqbird/, or from your account at http://deploy.ib-aid.com.

The name of HQbird Administrator package is HQbirdAdminNNNN.exe (it is in the zip archive).

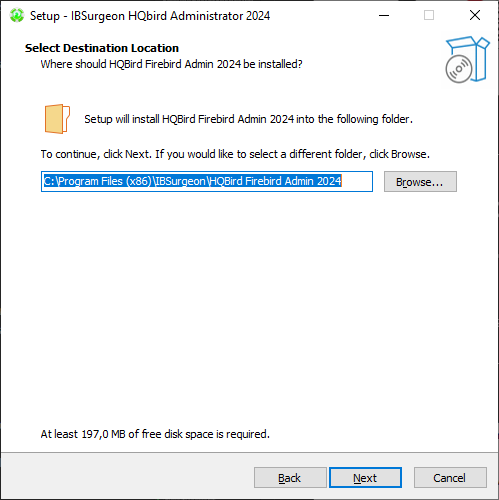

Run the installation wizard and follow the standard installation steps: digital signature check, license, then select the installation folder:

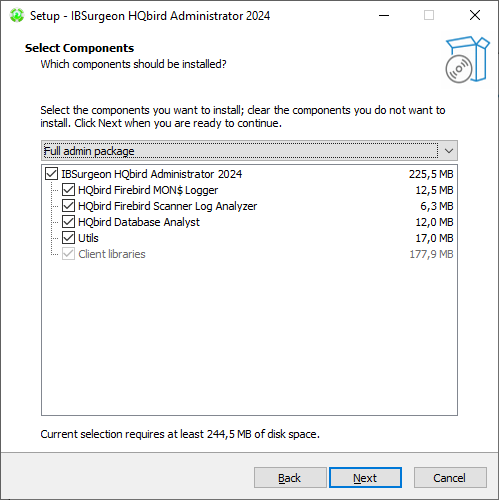

Select tools to install after that. We recommend that you install all tools.

Follow the instructions after that. After the installation is over, you will be offered to launch the activation wizard. If you are installing HQbird Admin on the same computer where HQbird Server was already installed, the license will be automatically detected by HQbird Admin tools.

2.3.1. How to install community version of Firebird on Windows

The easiest way is to install Firebird bundled with HQbird – just choose the desired version during the installation. However, sometimes it is necessary to use HQbird with a community version of Firebird.

|

Please note – to enable replication and performance features in HQbird you need to install Firebird bundled with HQbird ServerSide. |

To install Firebird separately, download the Firebird zip archive from www.firebirdsql.org

Unpack the archive file to a suitable location (for instance, C:\Firebird25), after that copy the optimized configuration file firebird.conf (see Optimized Configurations below) to this folder.

Then, go to the Bin folder and then use the Run As Administrator option to run the batch file with the architecture you need.

-

For Firebird 2.5 – run

install-superclassic.bat. -

For Firebird 3.0 and higher – set parameter

ServerMode=Superand runinstall_service.bat.

Of course, you can choose the SuperServer for 2.5 or Classic architecture for 3.0 if you know what you need.

As a result of running the command file, Firebird of the selected architecture will be installed and run as a service.

You can make sure the Firebird service is installed and running in the Services snap-in (services.msc in command prompt):

In this example, Firebird is installed in the folder H:\Firebird\Firebird-2.5.5.26928-0_x64 and running as a service with the SuperClassic architecture.

2.4. Installing HQbird Server on Linux

To install HQbird Server Side on Linux, you need to download HQbird ServerSide for Linux with built-in Firebird of the appropriate version from the link https://ib-aid.com/en/download-hqbird/

Depending on your version of Firebird, you need to download one of 4 files:

-

install_fb25_hqbird2024.sh -

install_fb30_hqbird2024.sh -

install_fb40_hqbird2024.sh -

install_fb50_hqbird2024.sh

You must be root or sudoer to install HQbird on Linux!

General prerequisites: install java version 1.8 before installing HQbird! We recommend OpenJDK, but Oracle’s Java is also fine.

2.4.1. Installation of HQbird with Firebird 2.5 on Linux

-

Uninstall all previously installed Firebird versions before running this installer. Make sure you don’t have Firebird installed from repositories!

-

Apply execution rights to the installation package:

chmod +x install_fb25_hqbird2024.sh -

Run installation script

install_fb25_hqbird2024.sh. It will install Firebird into/opt/firebirdand HQbird into/opt/hqbird -

By default, Firebird 2.5 is installed as Classic. We recommend to install it as SuperClassic – for this run script

/opt/firebird/bin/changeMultiConnectMode.shand choose thread

Next steps:

-

Please note that Firebird 2.5 will be installed with SYSDBA/masterkey

-

You can stop/start Firebird 2.5 with command

service firebird stoporservice firebird start. Check is it running with commandps aux | grep firebird -

You can stop/start HQbird with command

service hqbird stoporservice hqbird start. Check is it running with commandps aux | grep dataguard -

Run browser, and log in to HQbird FBDataGuard http://serverurl:8082, with user/password = admin/strong password

-

Choose “I have HQbird” and register HQbird with the email and password you have received from IBSurgeon Deploy Center.

-

If necessary, follow steps to setup — or see the appropriate chapter of this Guide.

2.4.2. Installation of HQbird with Firebird 3.0 on Linux

Prerequisites: make sure you have libtommath, libncurses5-dev and ICU installed (there will be an appropriate error message if they are not installed).

-

Uninstall all previously installed Firebird versions before running this installer

-

Apply execution rights to the installation package:

chmod +x install_fb30_hqbird2024.sh -

Run installation script

install_fb30_hqbird2024.sh. It will install Firebird into/opt/firebirdand HQbird into/opt/hqbird -

By default, Firebird 3.0 is installed as SuperServer. Keep it.

-

Firebird 3.0 will be installed with SYSDBA/masterkey

Next steps:

-

You can stop/start Firebird 3.0 with command

service firebird-superserver stoporservice firebird-superserver start. Check is it running with commandps aux | grep firebird -

You can stop/start HQbird with command

service hqbird stoporservice hqbird start. Check is it running with commandps aux | grep dataguard -

Run browser, and log in to HQbird FBDataGuard http://serverurl:8082, with user/password = admin/strong password

-

Choose “I have HQbird” and register HQbird with the email and password you have received from IBSurgeon Deploy Center.

-

If necessary, follow steps to setup — or see the appropriate chapter of this Guide.

2.4.3. Installation of HQbird with Firebird 4.0 on Linux

Prerequisites: make sure you have libtommath and ICU installed (there will be an appropriate error message if they are not installed).

-

Uninstall all previously installed Firebird versions before running this installer

-

Apply execution rights to the installation package:

chmod +x install_fb40_hqbird2024.sh -

Run installation script

install_fb40_hqbird2024.sh. It will install Firebird into/opt/firebirdand HQbird into/opt/hqbird -

By default, Firebird 4.0 is installed as SuperServer. Keep it.

-

Firebird 4.0 will be installed with SYSDBA/masterkey

Next steps:

-

You can stop/start Firebird 4.0 with command

service firebird-superserver stoporservice firebird-superserver start. Check is it running with commandps aux | grep firebird -

You can stop/start HQbird with command

service hqbird stoporservice hqbird start. Check is it running with commandps aux | grep dataguard -

Run browser, and log in to HQbird FBDataGuard http://serverurl:8082, with user/password = admin/strong password

-

Choose “I have HQbird” and register HQbird with the email and password you have received from IBSurgeon Deploy Center.

-

If necessary, follow steps to setup — or see the appropriate chapter of this Guide.

2.4.4. Installation of HQbird with Firebird 5.0 on Linux

Prerequisites: make sure you have libtommath and ICU installed (there will be an appropriate error message if they are not installed).

-

Uninstall all previously installed Firebird versions before running this installer

-

Apply execution rights to the installation package:

chmod +x install_fb50_hqbird2024.sh -

Run installation script

install_fb50_hqbird2024.sh. It will install Firebird into/opt/firebirdand HQbird into/opt/hqbird -

By default, Firebird 5.0 is installed as SuperServer. Keep it.

-

Firebird 5.0 will be installed with SYSDBA/masterkey

Next steps:

-

You can stop/start Firebird 5.0 with command

service firebird-superserver stoporservice firebird-superserver start. Check is it running with commandps aux | grep firebird -

You can stop/start HQbird with command

service hqbird stoporservice hqbird start. Check is it running with commandps aux | grep dataguard -

Run browser, and log in to HQbird FBDataGuard http://serverurl:8082, with user/password = admin/strong password

-

Choose “I have HQbird” and register HQbird with the email and password you have received from IBSurgeon Deploy Center.

-

If necessary, follow steps to setup — or see the appropriate chapter of this Guide.

2.4.5. Installation of HQbird without Firebird on Linux

If you don’t want to change the existing Firebird installation, please run the following command:

install_fb4_hqbird2024.sh –-nofirebirdIt will install HQbird without Firebird binaries.

|

Please note, that advanced features (replication, multi-thread support, encryption, authentication) require HQbird with Firebird binaries! |

2.4.6. Firewall settings

Make sure these ports are allowed in your firewall configuration:

-

3050 - Firebird;

-

3051 - events Firebird

-

8082 - web-console DataGuard

-

8083 - monitoring

-

8721 - Passive FTP

-

40000-40005 - FTP passive ports

-

8720 - active FTP

-

8722 - socket server

-

8765 - license server

These ports can be changed in /opt/firebird/firebird.conf (RemoteServicePort), /opt/hqbird/conf/network.properties (server.port) and /opt/hqbird/conf/license.properties (serverlicense.port).

Make sure to allow these ports in your firewall configuration.

|

Attention!

After upgrade, make sure that there is only the one copy of HQbird is running! If there are 2 copies, stop them ( |

2.5. Upgrade existing HQbird version

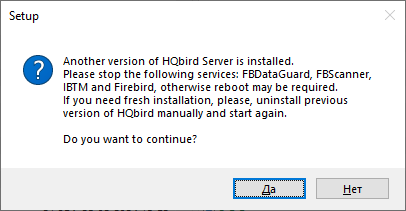

HQbird installer on Windows (from v 2018R2) and on Linux (from v 2018R3) supports automatic upgrade of the configuration of already installed HQbird version 2017R2 and later.

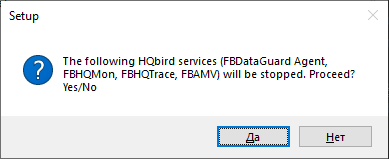

If HQbird installer will notice the previous version of HQbird, it will ask you to confirm the upgrade, and in case of the positive answer, it will stop Firebird, HQbird and upgrade their files.

The configuration will be retained — it means that firebird.conf, aliases.conf or databases.conf, securityX.fdb, and HQbird configuration files will not be deleted (HQbird configuration files will be upgraded to the new configuration version).

The upgrade does not change the Windows service settings for Firebird and HQbird – it means that if you have changed “Run As” properties of the service, they will be retained.

|

After upgrade on Linux Firebird and HQbird must be started manually! |

|

After upgrading HQbird, open the web-console and choose in the right upper corner: “Refresh HQbird web-console”. It is necessary to clean the cache of JavaScript part of the application.

|

Please note — if you are installing HQbird 2024 over the old version of HQbird on Windows, the dialog with installation options will be shown as disabled, because we cannot automatically upgrade from 2.5 to 3.0, 4.0 or 5.0, and installer can only upgrade the same components. If you need a different installation, remove old version of HQbird from the computer prior installing 2024.

2.6. Registration of HQbird

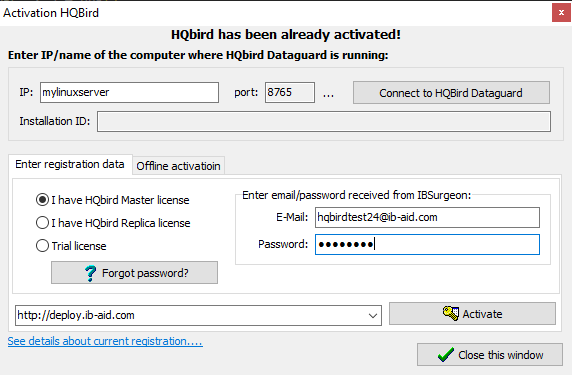

2.6.1. How to activate HQbird

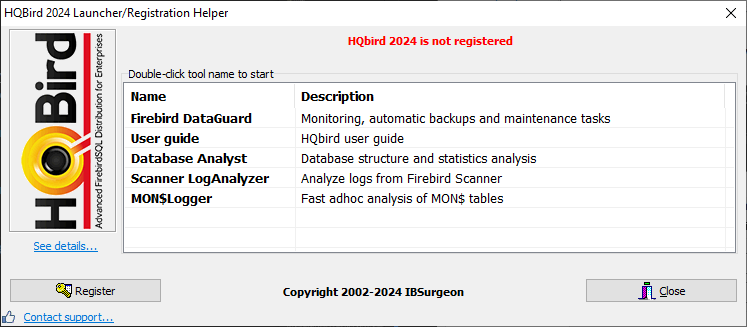

To activate HQbird, you can either use a separate utility included in the server and administrator packages for Windows, or use the registration mechanism embedded into the HQBird Firebird DataGuard web interface (for Windows and Linux), or run any tool from the administrator software and use the built-in activation wizard.

The activation wizard looks and works the same in the tools and in the activation tool. It is enough to perform activation once on any computer that can connect to the server where HQbird ServerSide is installed.

You can launch the registration utility from the Start menu (IBSurgeon\HQbird Firebird Admin\HQbird):

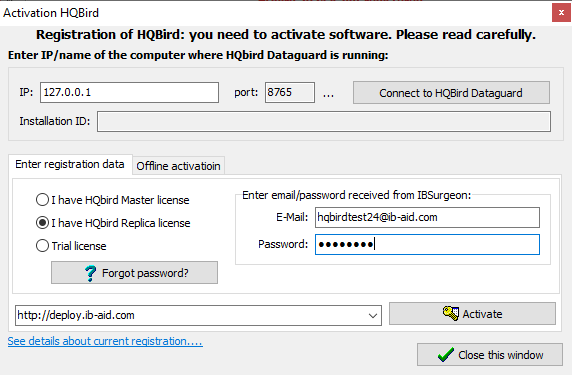

If you click the Register button (or Re-Register for repeated registration), you will see the activation wizard:

After that, specify the IP address or the computer name of the server HQbird is installed on in the upper input field and click Connect to HQbird Server. If you started registration utility on the same computer with HQbird Server, it will be “localhost”, otherwise — some remote address.

Then enter your registration data. If you have a license, enter your e-mail address and password that you used to register with the IBSurgeon Deploy Center and click Activate.

|

If you have no license, choose Trial license, specify your e-mail address and click Activate. You will be automatically registered and the password will be sent to your e-mail address. |

Right after you click Activate, the registration wizard will try to connect to the IBSurgeon Deploy Center () and obtain a license. If it succeeds, you will see the corresponding message. If there are any problems, you will see the error message.

If you forget the password, click the Forgot password… button and it will open the browser with the password recovery form.

If you need to purchase a new or additional license or renew your subscription, click Purchase.

Click Close this window after the registration is over.

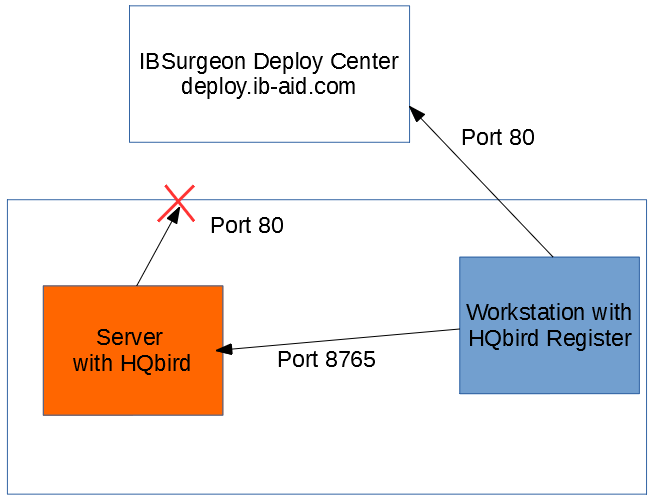

Internet Activation via a Client Computer

If the server with HQbird Server does not have access to the Internet, you can still activate it via the Internet: you can install HQbird Administrator on any client computer with Windows that has both access to the Internet and access to the HQbird Server and perform activation.

Run HQbird Register tool and enter there: IP address of your server (or, server name — for example, mylinuxserver), email and license, and click Activate:

2.7. Configuring firebird.conf for the best performance

HQbird includes set of optimized configuration files for all Firebird versions from 1.5 to 5.0 – they are located in HQbird\Configurations.

If you did not perform a justified tuning of firebird.conf or you are using default firebird.conf, consider to use one of the optimized files from this collection.

There are three variants of Firebird configuration files for every Firebird architecture: balanced, read-intensive and write intensive.

We always recommend to start with balanced firebird.conf.

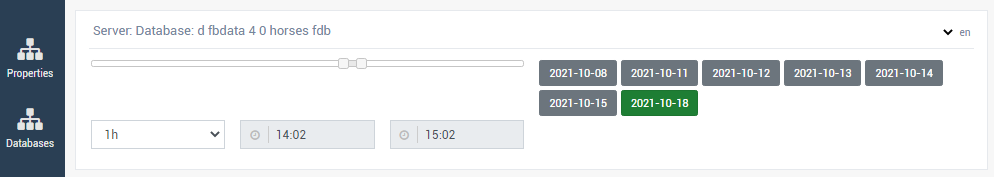

Then we recommend to measure actual ratio between reads and writes using HQbird MonLogger tool (tab “Aggregated

Performance Statistics”). In 90% of cases there are much more reads than writes, so the next step is to try read-optimized firebird configuration file.

Firebird configuration greatly depends on the hardware, so if you want to tune Firebird properly, please also read “Firebird Hardware Guide”, it will help you to understand what parameters must be tuned.

For the deep tuning of high-load Firebird databases IBSurgeon offers Firebird Database Optimization Service: https://ib-aid.com/en/firebird-interbase-performance-optimization-service/

Also, HQbird FBDataGuard analyses the database health and sends alerts with intelligent suggestions to increase specific parameters in firebird.conf, like TempCacheLimit or LockHashSlots.

|

Attention!

If you have specified many page buffers in the header of your database and installed SuperClassic or Classic, it can affect Firebird performance.

To avoid the potential problem, set page buffers in the header of your database to 0, it will ensure that the value from gfix –buff 0 –user SYSDBA –pass masterkey disk:\path\database.fdb |

3. Jobs, monitoring and maintenance configuration in FBDataGuard

Please follow these steps:

-

Make sure that you have Firebird 2.5.5 or later, and it is working;

-

HQbird FBDataGuard service is installed and running

-

You can check it using Services applet in Control Panel (right-click on “My Computer”, choose “Manage”, then “Services and Applications”, “Services” and find in the list “FBDataGuard Agent”)

-

At Linux you can check it with command

ps aux | grep dataguard.

-

-

Make sure the FBDataGuard port is accessible (8082) and it is not blocked by firewall or any other antivirus tools. If necessary, adjust port in FBDataGuard configuration file (see Adjusting web-console port).

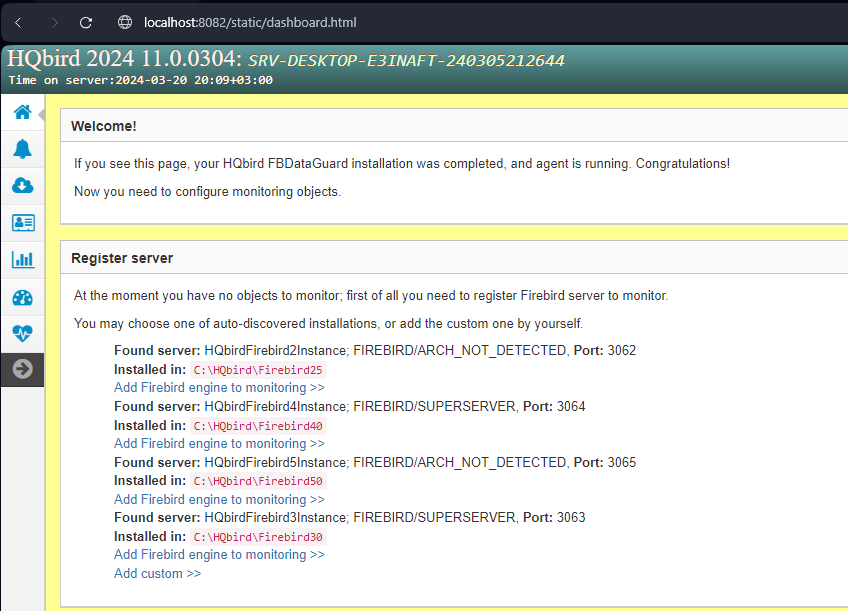

3.1. Launch web-console

To open web-console type in your browser http://localhost:8082 or use IP address of your server with installed HQbird ServerSide.

Or you can choose in “Start” menu .

3.1.1. Supported browsers

FBDataGuard web interface works correctly with Firefox, Chrome, Safari, Microsoft Edge and Internet Explorer 11.

3.1.2. Error message regarding webs-site certificate

If you have configured FBDataGuard to use https, the browser will indicate that this web-site is not safe, and it will recommend leaving web-site. This message is caused by the default security certificate for FBDataGuard web-console.

Please ignore this message and click to open FBDataGuard web-console.

It will ask you for username and password (login dialog can be different for Firefox, Safari or Chrome).

|

Attention!

Default username/password for HQbird FBDataGuard is "admin"/"strong password" (without quotes). |

3.1.3. Auto discovery feature of FBDataGuard

At the first launch FBDataGuard will check computer for installed Firebird servers. FBDataGuard for Windows search registry for Firebird records, FBDataGuard for Linux checks default locations of Firebird installations.

FBDataGuard will show the list of all Firebird copies installed, but only the one instance of Firebird can be monitored by FBDataGuard. Choose it by clicking Add Firebird engine to monitoring >>

If you don’t see Firebird instance in auto discovery list, you can choose Add custom >> and configure instance parameters manually.

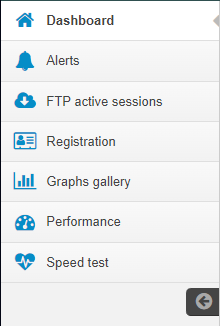

3.2. Overview of web-console.

3.2.1. Parts of web-console.

FBDataGuard Web-console contains 7 tabs (in the left side of the screen, usually they are collapsed):

-

Dashboard — it is the main tab where administrator can configure HQbird FBDataGuard and see server and databases statuses.

-

Alerts — contains the full list of alerts, generated by FBDataGuard.

-

FTP active sessions — display information about active FTP sessions.

-

Registration — license and registration/activation information.

-

Graphs gallery — performance graphs.

-

Performance — performance monitoring settings and performance reports.

-

Speed test

3.2.2. Jobs

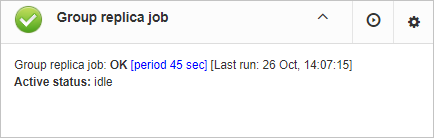

Web-console is intended for easy configuration of activities (called “jobs”) which are fulfilled by HQbird FBDataGuard.

Almost all FBDataGuard jobs have 2 purposes: the first is to monitor for some values and to raise alerts if necessary, and the second is to store historical values into logs, so later it’s possible to see the dynamics of important parameters of Firebird server and database.

In this section we will consider general configuration of jobs parameters, but not an analysis of gathered logs.

3.2.3. Jobs widgets

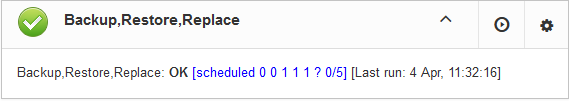

General approach is the following: each activity is represented by a “widget”, which has the following parts:

Status — it is indicated with color icon and name. Status of database is a summary of all included server-level jobs and databases' statuses, and, respectively, status of database is a summary of all database-level jobs.

3.2.4. Status types

CRITICAL means problems, OK means “Everything is fine”, WARNING means some issues which require attention, MAJOR means major issue, MINOR – minor issue, MALFUNCTION means that jobs was not succeeded (something prevents its execution), NOT_AVAILABLE means that job is not available in this server or database version.

OFF means that job is not active, UNKNOWN means that job is active but was not started yet, so actual results are unknown.

Job name indicates the name of activity.

-

Configuration button opens configuration dialog, which is individual for each job.

Configuration button opens configuration dialog, which is individual for each job. -

Starts the job immediately.

Starts the job immediately. -

Resolved is the link to flush the status to UNKNOWN and forgot errors which were discovered previously. The status will be updated according the current situation after the next execution of the job.

Resolved is the link to flush the status to UNKNOWN and forgot errors which were discovered previously. The status will be updated according the current situation after the next execution of the job.

Last run shows the time after the last run of this job.

Period/Schedule to run shows how often or when the job will be started.

More>> is the link which opens the widget and shows more details and suggested action for administrator to resolve the situation.

All jobs in FBDataGuard have default settings, which are very close to recommended values for the 80% of Firebird installations, so after initial configuration server and database will be protected at pretty good level comparing with default installation, however, we recommend additional configuration and tuning for every job. In the next sections we will consider each job and its configuration.

3.3. Firebird server configuration in FBDataGuard

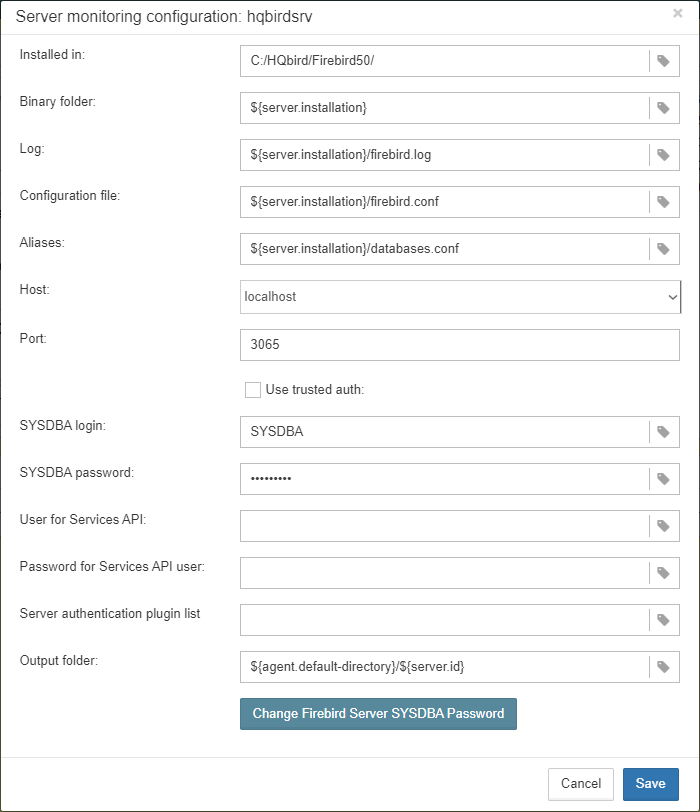

3.3.1. Firebird server registration

To register auto-discovered server, you need to click at Add Firebird engine to monitoring>> and then adjust auto-discovered settings.

|

Note: to use Windows Trusted Authentication (by default it’s off), you need to be sure that libraries |

When installing under Windows, if the option to automatically register the master/replica is selected, the server will be added automatically. In this case, you can skip this step.If the option to automatically register a replica is selected, then the database will be added in addition.

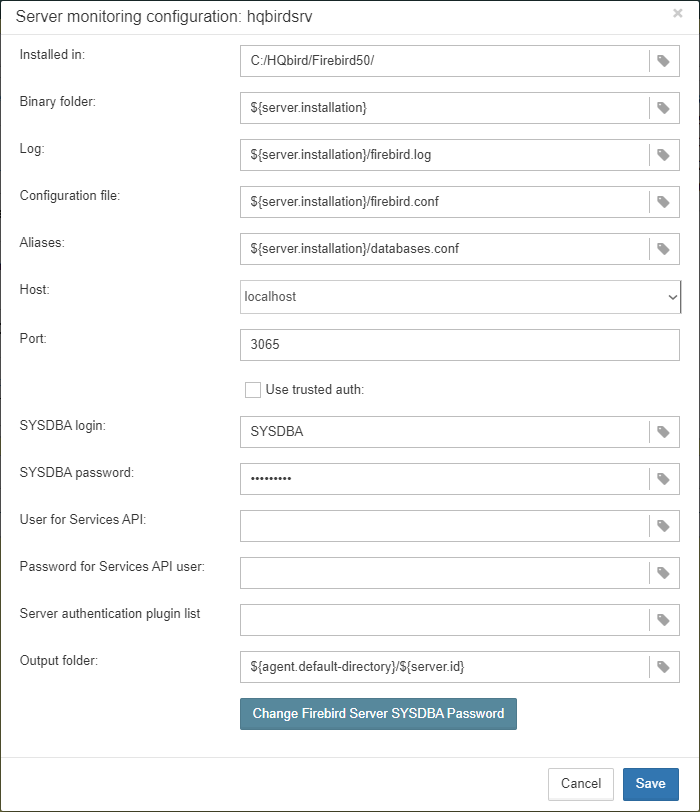

Let’s consider what can you see in the Server dialog (and, normally, you don’t need to change them):

| Installed in |

Firebird installation folder |

| Binary folder |

Firebird bin folder (for Firebird 3 or higher on Windows Binary folder is the same as the installation folder) |

| Log |

location of |

| Configuration file |

location of |

| Aliases |

location of |

| Host |

name of the server, usually |

| Port |

network port for Firebird, according |

| Use trusted auth |

use trusted authentication, by default it is off |

| SYSDBA login |

name of SYSDBA user, usually it is SYSDBA |

| SYSDBA password |

password for SYSDBA |

|

User for Services API

Password for Services API user Server authentication plugin list Output directory |

Folder where backups, statistics and gathered logs will be stored |

By default “Output directory” for Firebird server is ${agent.default-directory}/${server.id}, it corresponds to C:\HQbirdData in case of a default installation.

It can be not very convenient, so we recommend pointing FBDataGuard output directory to more simple path, usually located at disk where backups are intended to be stored, for example F:\myserverdata.

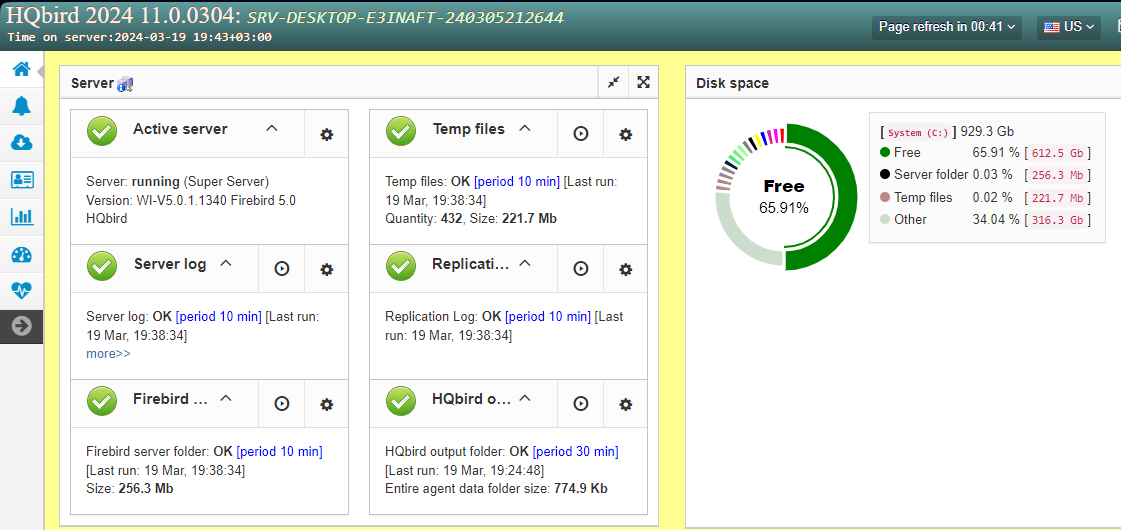

After clicking “Save” FBDataGuard will populate default configurations files and immediately start analysis of firebird.log. It can take a while (for example, 1 minute for 100Mb firebird.log). After that you will see initial web-console with registered Firebird server:

FBDataGuard shows alerts and statuses of monitored objects: if everything is fine, it shows green signs, otherwise there will be yellow or red notifications.

Below we will consider in details each monitored objects and its settings.

|

Note: you cannot delete registered Firebird server in FBDataGuard web-console. The only way to unregister server is to delete its configuration files. In general, there is no reason for deleting registered server, until you want completely uninstall FBDataGuard. |

3.3.2. Server: Active server

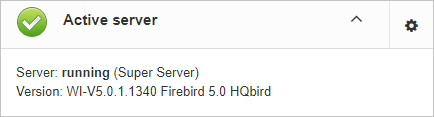

Server: Active server widget shows summarized status of all server-level jobs and statuses of monitored databases.

Server: Active server also indicates Firebird currently running or not and shows detailed version of Firebird and HQbird.

If you click on configure link, you will see the same dialog that we have used to register Firebird instance in FBDataGuard, and now it can be used for changing Firebird instance properties:

In general, there is no need to edit Firebird server details after the registration, until you are not reinstalling Firebird — but in this case we recommend reinstalling HQBird too.

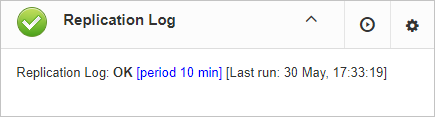

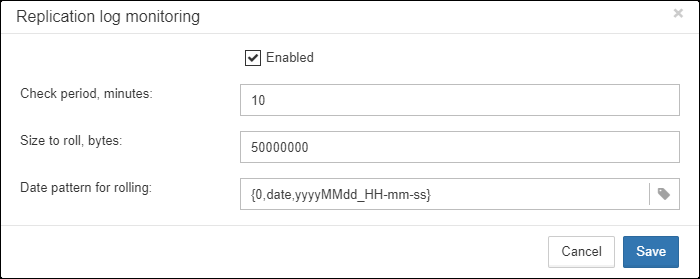

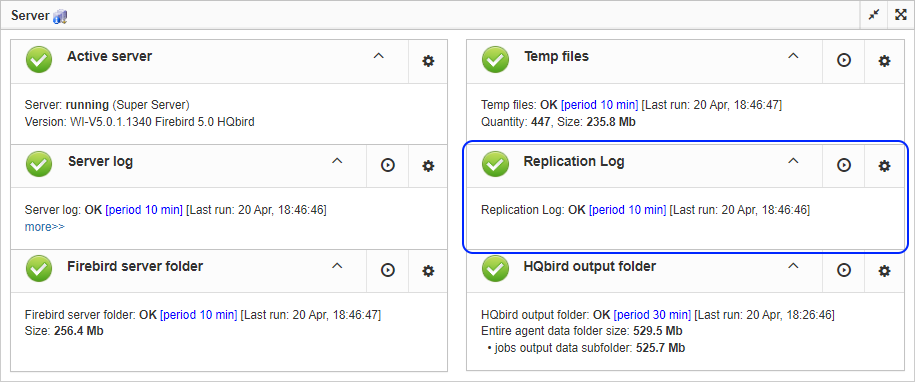

3.3.3. Server: Replication Log

FBDataGuard check replication.log for errors. In case of error it sends an appropriate alert (by email) to the administrator.

To enable this job please check “Enabled”.

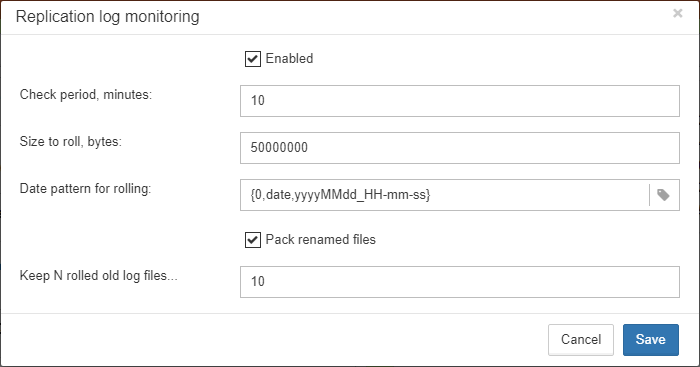

-

“Check period, minutes” — how often to check

replication.logfor changes -

“Size to roll, bytes” — if

replication.logwill exceed will value, it will be renamed according date-time pattern -

“Date pattern for rolling”-- how to rename

replication.log -

“Keep N rolled old log files” — how many errors will be stored in the list of the recent errors.

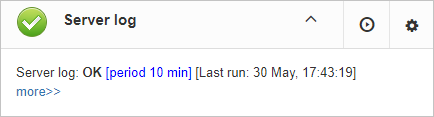

3.3.4. Server: Server log

“Server log” job periodically checks firebird.log and if it detects that file was changed, log analysis starts. The embedded analytic engine checks each entry in firebird.log and categorizes them into several categories with different levels of a severity. According the severity of messages status of job is assigned and appropriate alerts are generated.

Once administrator has reviewed errors and alerts (and performed necessary actions to solve the reason of error), he need to click on “Resolved” link and FBDataGuard will forget old error messages in firebird.log.

In the configuration dialog of “Server log” you can enable/disable this job and set the check period (in minutes).

Also this job watches for the size of firebird.log and if its size exceeds “Size to roll”, FBDataGuard will split firebird.log and rename it according to the date-time pattern.

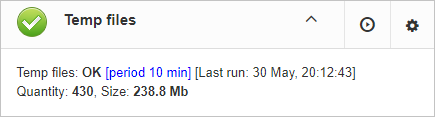

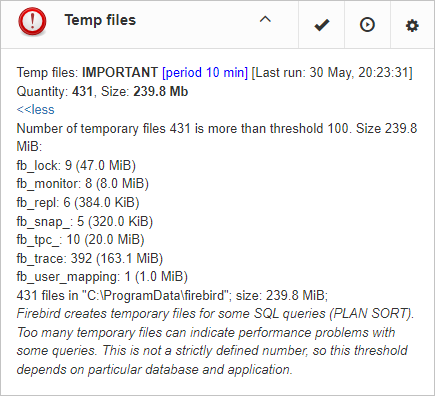

3.3.5. Server: Temp files

“Server: Temp files” job is useful to catch and solve performance problems with Firebird database.

While performing SQL queries Firebird stores intermediate results for sorting and merging data flows in temporary files, which are allocated in specified TEMP locations. FBDataGuard shows at “Server: Temp files” widget information about quantity and size of temporary files.

FBDataGuard recognizes locations of TEMP folders and monitors quantity and size of temporary files. Lack of space can lead to the performance problem or more serious errors, too many (or too large) temporary files can indicate problems with SQL queries quality.

Using configuration dialog you can enable/disable this job, set period and thresholds to the maximum size of temporary files (size of all files) and quantity.

If you see that size of temp files is too high and if there is enough RAM on the server, increase TempCacheLimit parameter in firebird.conf to fit all temporary tables into RAM.

Also, HQbird checks other temp files used by Firebird — if you see extreme values (several Gb) for trace or monitor, the good idea will be check the FIREBIRD_TMP folder for outdated files (with old modification timestamps). Please note — the screenshot below is not a real alert (i.e., values are Ok), it was created to demonstrate the output in case of large temporary files.

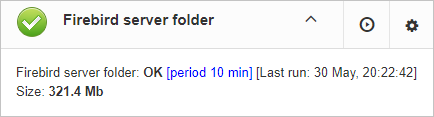

3.3.6. Server: Firebird server folder

“Firebird server folder” jobs monitors size, occupied by Firebird installation. It’s enabled by default.

There are several threats prevented by this job: maladministration issues when database volumes or external tables are being created in %Firebird%\Bin folder, very big firebird.log which can exhaust all places at drive with Firebird installation, and some other problems.

Also this job monitors and analyses information, gathered by all space-related jobs (including database-level jobs). At the picture below you can see quick representation of space analysis for all drives where Firebird, databases and backups are stored.

Using configuration dialog you can enable/disable this job, set period of checking and thresholds for server folder size.

By default, we use 1 Gb is a standard setting for Firebird installation.

If the size of your Firebird is larger, please consider clean-up of old logs and other unwanted artifacts, or increase parameter Max occupied (in bytes) to prevent false alerts.

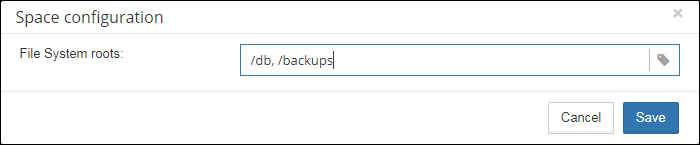

Note for Linux users: if you see red warning regarding the inconsistent space information, add locations with database and backups to Disk Space widget:

You can get idea where is your database and backup is actually located with command df -h.

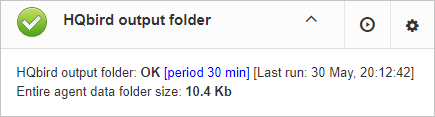

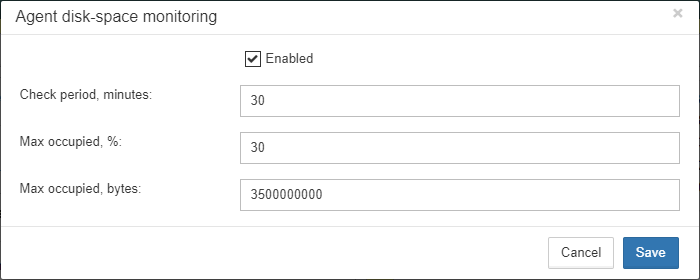

3.3.7. Server: HQbird Output Folder

“HQbird output folder” monitoring is intended to watch space occupied by reports, logs, stats, metadata repository and other data, gathered and generated by HQbird — this folder by default is C:\HQbirdData\output.

For databases unattended for a long time (1-2 years) it is possible that FBDataGuard logs will occupy too much space and lack of space can lead to database outage. To prevent it for sure, “HQbird output folder” is watching for occupied space.

By default, “HQbird output folder” job is enabled.

Also, if someone has ignored recommendations to put backups’ folders to the explicit locations, it is possible that database backup will be created inside Agent folder. In this case you’ll see CRITICAL status immediately — FBDataGuard will recognize and warn you regarding wrong configuration.

And, this job is useful for bundles of FBDataGuard and third-party applications.

In the configuration dialog you can enable/disable this job, set check period (by default it is 10 minutes), and set thresholds for alerts.

Thresholds can be set in % of max size occupied by log or using the explicit size in bytes.

FBDataGuard checks both values and raises alert for the first threshold. If you wish to set % only, you need to set -1 as value to “Max occupied”.

3.4. Database configuration in FBDataGuard

3.4.1. Firebird database registration

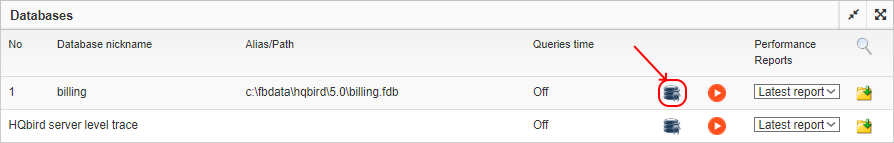

The list of databases monitored by FBDataGuard is in the “Databases” section.

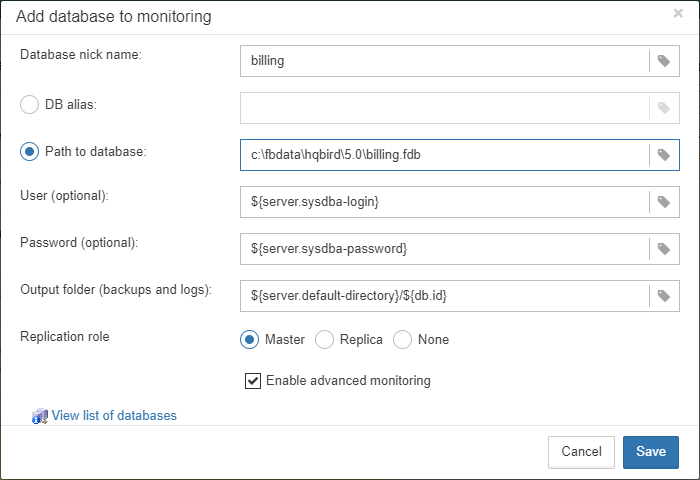

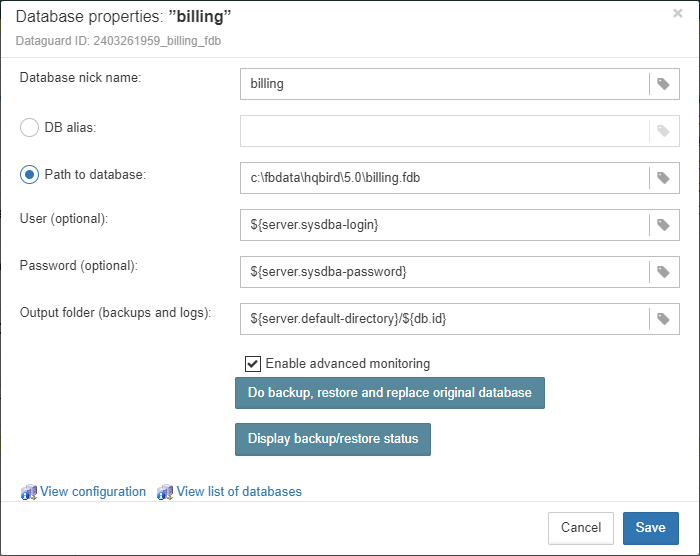

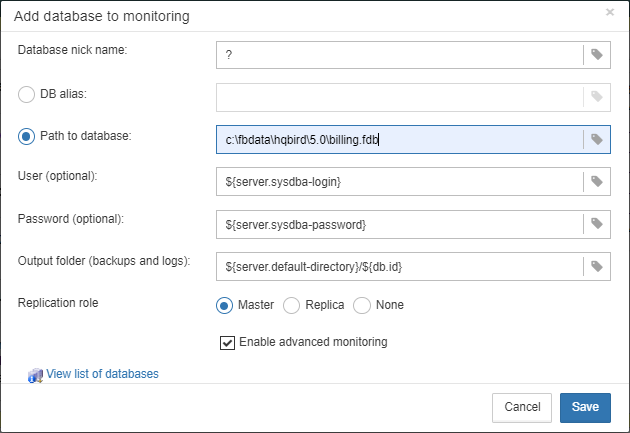

To register database in FBDataGuard, you need to click at the symbol “Plus” in the right corner of “Databases” (there will be a hint “Add database”) and fill the following form:

-

“Database nick name” is for your convenience, it is used to refer this database in alerts and email messages.

-

“DB alias” is a database alias from

aliases.confor indatabases.conf. If you specify both “DB Alias” and “Path to database”, “DB Alias” will be used. -

“Path to database” is the local path to database (remember that FBDataGuard should work at the same computer with Firebird). If you are putting database on external drive, it can raise error “File… has unknown partition”. To fix it you need to click on “Configure” at Server widget and click “Save” to make FBDataGuard re-read partitions.

-

“Output folder (backups and logs)” is the folder where FBDataGuard will store backups, logs and statistics for this database. If you do not select HQbirdData folder during the installation, and if you do not specify output folder for the server, it’s a good idea to specify “Output folder” to some explicit location like

F:\mydatabasedata. -

“Enable advanced monitoring” — see Advanced Monitor Viewer

|

You can specify exact absolute locations for backups and statistics later in appropriate dialogs. |

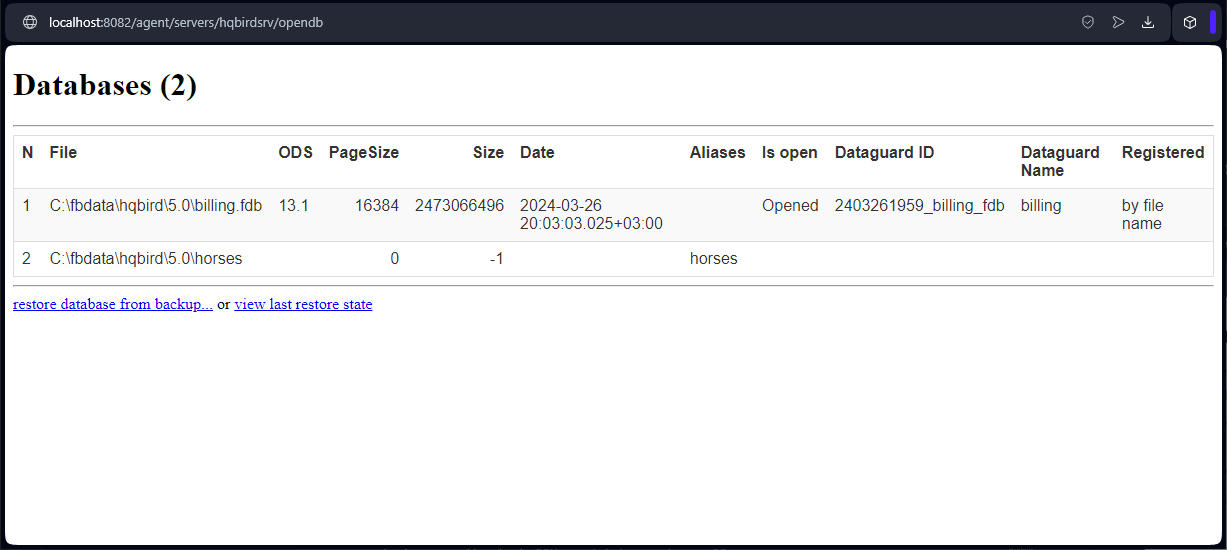

You can see the list of databases available for registration or their aliases by clicking on the link View database aliases.

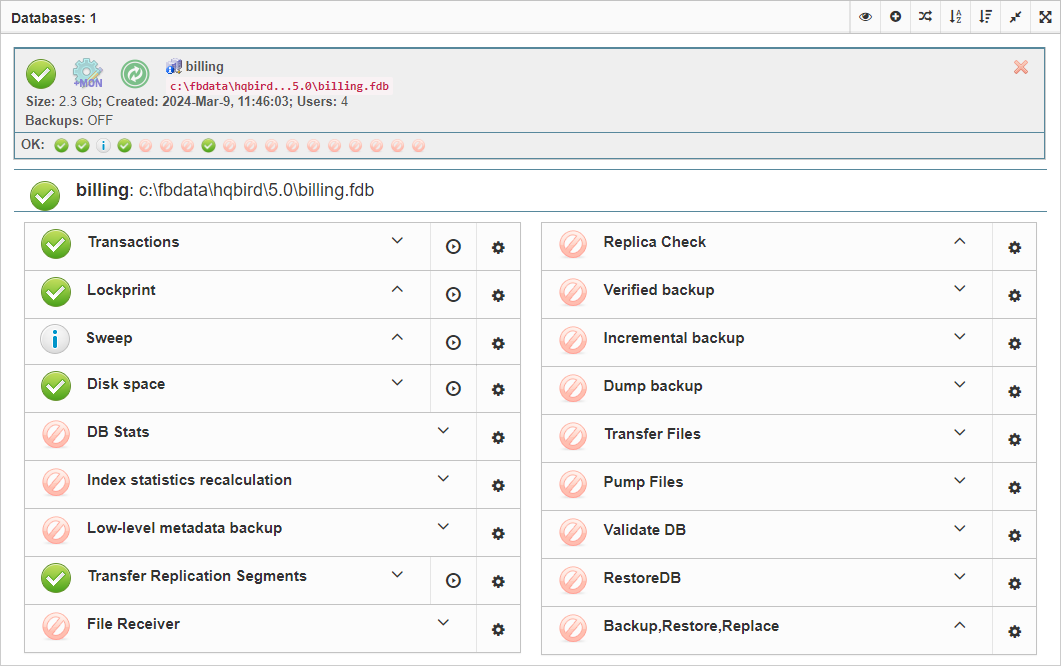

After registration, FBDataGuard will populate database configuration with default values and then show web-console with registered database:

You can adjust database settings later; now let’s proceed with alerts setup.

3.4.2. Database: Configure

FBDataGuard can monitor several databases at the single server (up to 80 databases). For each database the separate widget is created. At the top widget database status is shown, database nickname (it’s specified during database adding and can be changed). Also database widget shows the full path to the database, its size, status of backups and the number of currently connected users.

Using configuration dialog you can set database nickname, path to database and output folder for the database (to store logs and jobs results).

FBDataGuard checks the validity of path to database and it does not allow specifying the wrong path.

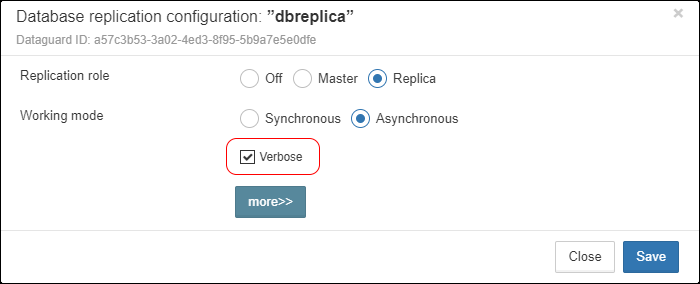

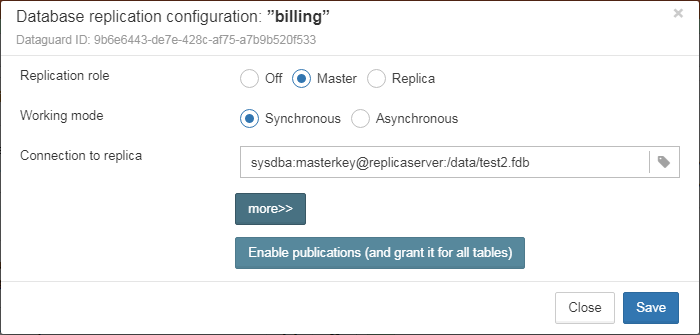

Also, for HQbird Enterprise, in the database widget you can see status of the replication and configure replication by clicking on the icon. Please read more details in the replication configuration section.

Since HQbird 2020, the database widget in HQbird also shows the encryption status of the database.

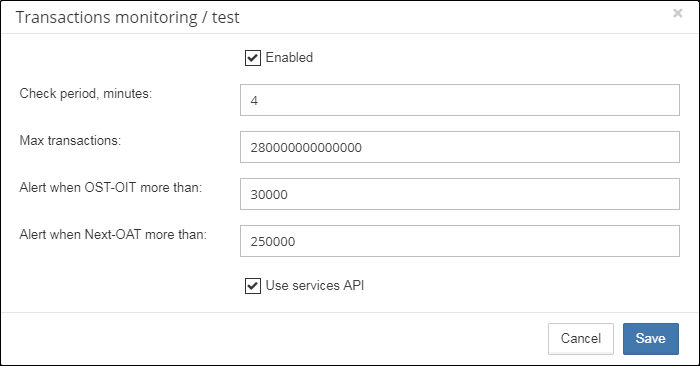

3.4.3. Database: Transactions

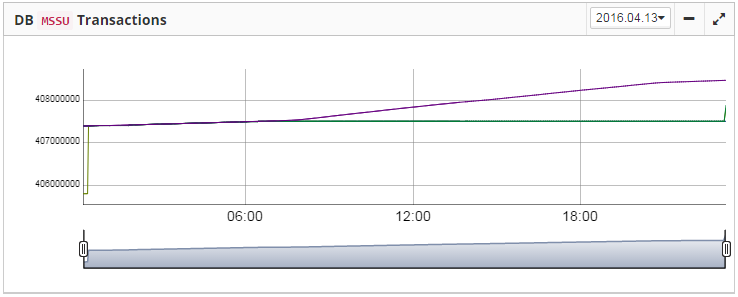

“Database: Transactions” job is intended to log transactions activity. It monitors 2 important intervals: difference between Oldest Active Transaction and Next transaction and gap between Oldest Snapshot and Oldest Interesting.

If these intervals are out of the frames of the specified threshold, it means problem with transactions management.

These logs can be analyzed to get helpful insight regarding database performance and application quality (see more information here http://ib-aid.com/en/articles/ibanalyst-what-you-can-see-at-summary-view/).

This job also monitors the implementation limit in Firebird: maximum transactions number in Firebird versions before 3.0 should be less than 231-1. Near this number database should be backup and restored. It will throw an alert if transaction number will be close to the restrictions.

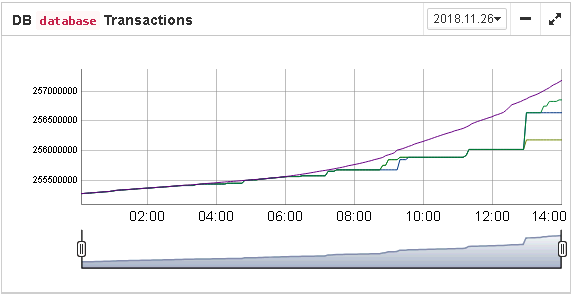

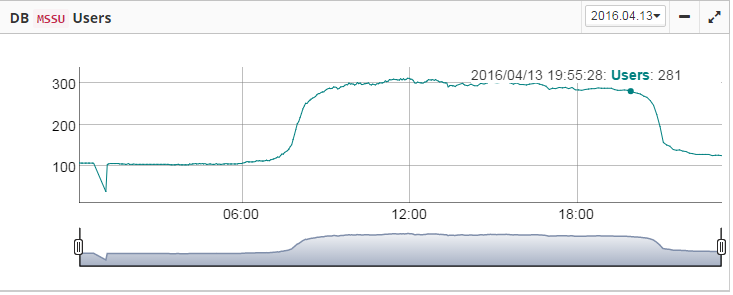

Also, the transaction dynamics is shown on the tab “Graphs gallery”:

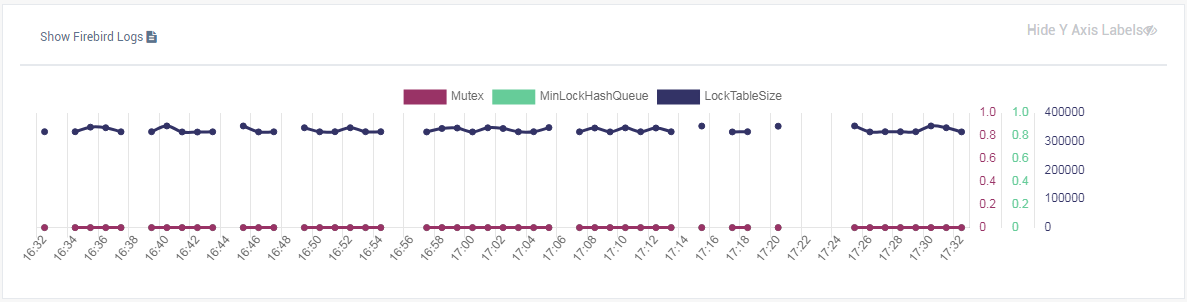

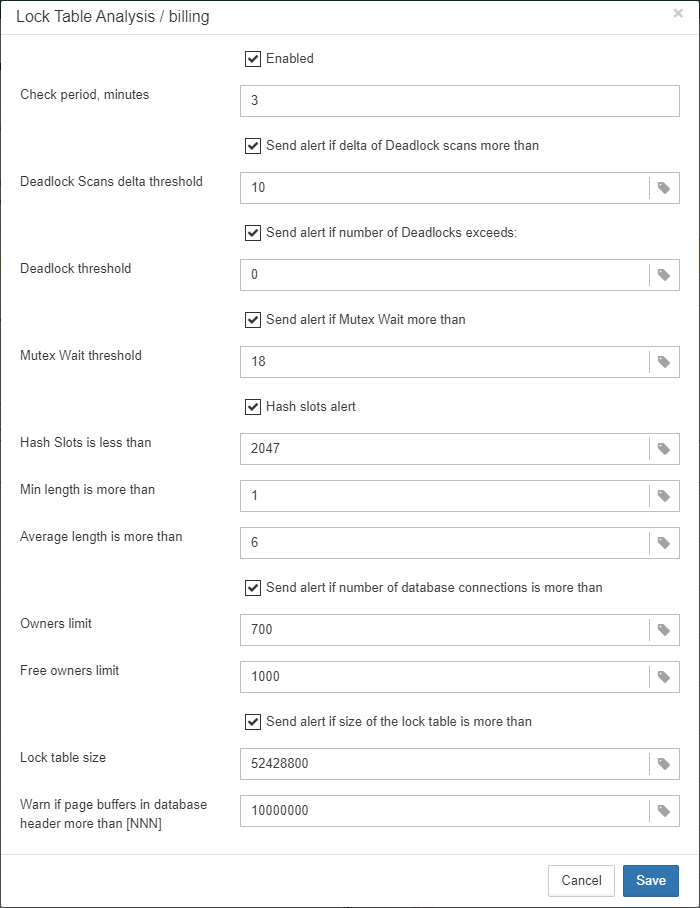

3.4.4. Database: Lockprint

“Lockprint” job monitors the information from the lock table of Firebird. It is very important for architectures Classic/SuperClassic and useful for SuperServer.

The lock table is an internal Firebird mechanism to organize access to the objects inside Firebird engine. HQbird monitors the important parameters of the lock table:

-

Check period, minutes — how often HQbird analyses lock table. 3 minutes is an optimal interval.

-

Deadlock Scans delta threshold — deadlock scan is a process, started by Firebird engine, in case of a long response delay from the one of the threads. If a number of deadlock scans is high, it means that Firebird is heavily loaded. The value is accumulated since the Firebird engine start. The default value is pretty big – 12345, so if it is exceeded, it means that database performance is poor.

-

Deadlock threshold — if Firebird engine finds the true deadlock during the deadlock scans, it increases this value. Please note: true deadlocks are very seldom. Don’t confuse them with transactions' conflicts (“deadlock. Lock conflict on nowait transaction” etc).

-

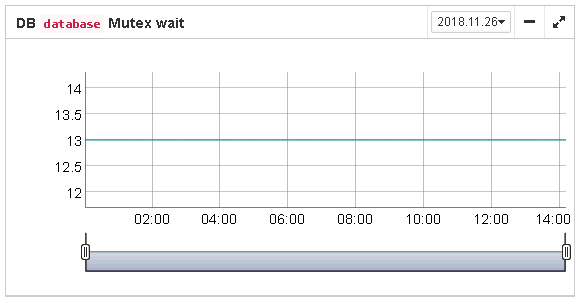

Mutex Wait threshold — Mutex Wait is a parameter of lock table which implicitly indicates the conflicts for the resources. The higher mutex wait, the more competition exists inside the engine for the resources. By default, the mutex wait threshold is set to 18%, but this value is not universal for all databases. The good approach is to watch for the mutex values during 1-2 weeks and then set the highest value seen during this period. Mutex wait graph is available in Mutex Wait gallery.

-

Hash slots alerts. Lock table header has a parameter “Hash lengths (min/avg/max): 0/0/4”, it shows the lengths in the lock table. It is important to keep these values as low as possible, so HQbird monitors them and suggest, how to improve the situation, if hash length is more than specified in this job.

-

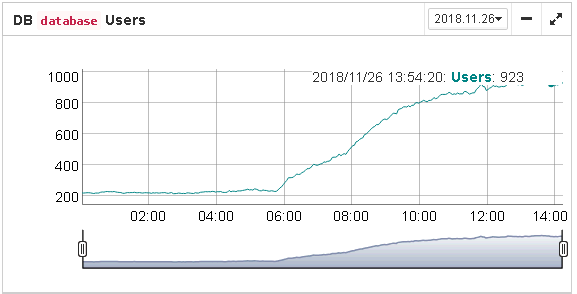

Owners limit. “Owners” is a number of connections established to the specified database. In fact, this is the fastest way to get the actual number of connections to the database with the minimum load to the database — other ways like request to

MON$ATTACHMENTSorisc_tpb_databasehave various disadvantages. The limit here should be set according the actual peak number of connections. For example, if you are sure that peak number of the connections to your database is 500, set 550 as Owners limit, and if at some moment the load will increase, you will not miss that moment.

-

Free owners limit. “Free owners” is the value between the peak number of owners and current number of owners. If you see

Free owners = 0, it means that number of connections grows steadily since the Firebird start. If you see high number of Free owners, it can be sign that many connections were disconnected recently. -

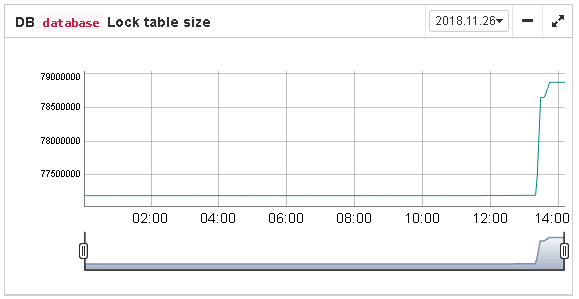

Lock table size. The lock table size is an implicit indicator of the load to the system. Normally, lock table size should be stable. Also, it is recommended to set the initial lock table size to the value it has after some active work period — though the lock table is enlarged on demand, the re-allocation process is a heavy operation and can lead to micro-freezes in database responses. Lock table graph is useful to determine the proper initial value.

-

Lock table queue. Lock table queue does not have the explicit threshold in Lockprint job, but its values are collected and shown in “Graphs gallery”. Lock table queue is an indicator of a general load.

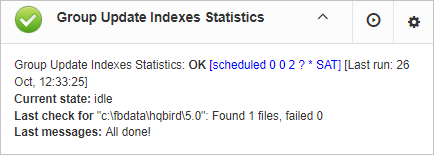

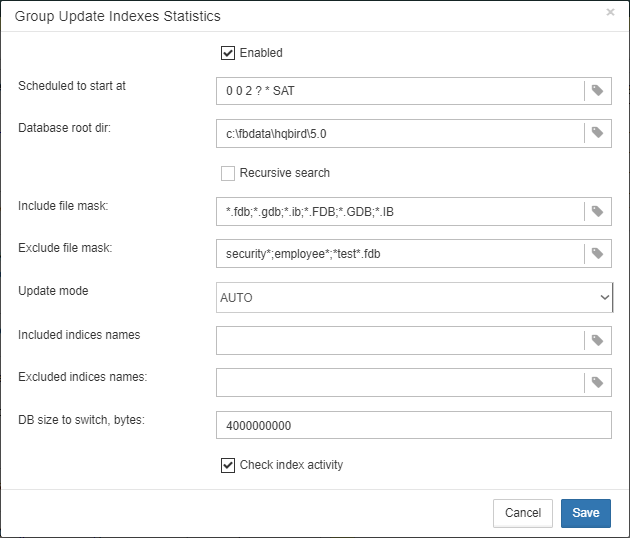

3.4.5. Database: Index statistics recalculation

“Database: Index statistics recalculation” is an important job which helps to keep performance of indices at optimal level, and performs additional checking of a database health.

“Database: Index statistics recalculation” allows to run re-computing of indices selectivity values. During this procedure Firebird quickly walks through leaf pages of indices, and renews statistics about selectivity. By visiting these pages Firebird also verifies their integrity and if index is corrupted, the warning will be thrown.

Also, this job verifies that all indices are active in database. Inactive or non-activated indices usually indicate corruption and lead to performance degradation.

By default this job is disabled, but we recommend enabling it after careful selecting of indices for the recalculation.

There are three modes in this job: AUTO, ALL, SELECTED.

ALL is the mode where all indices will be checked.

AUTO is the default mode. It is very similar to ALL, but it also checks the size of database and do not touch indices if database is bigger than 3.6Gb.

SELECTED is the recommended mode. It allows choosing of indices which should be recomputed or those which should be avoided.

To include indices into the list of recomputed, you need to specify indices names (divided by comma), and to exclude – perform the same in the appropriate field.

As you can see at configuration dialog screenshot, there are fields to enable/disable job, to set update mode, and to include or exclude indices. “DB size to switch, bytes” is to set limit where AUTO mode is working. “Check index activity” switch should be always on, until you are not performing special manipulations with inactive indices.

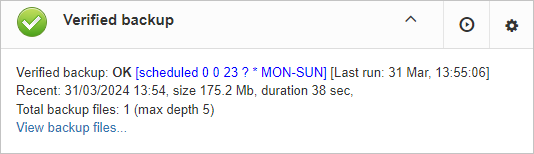

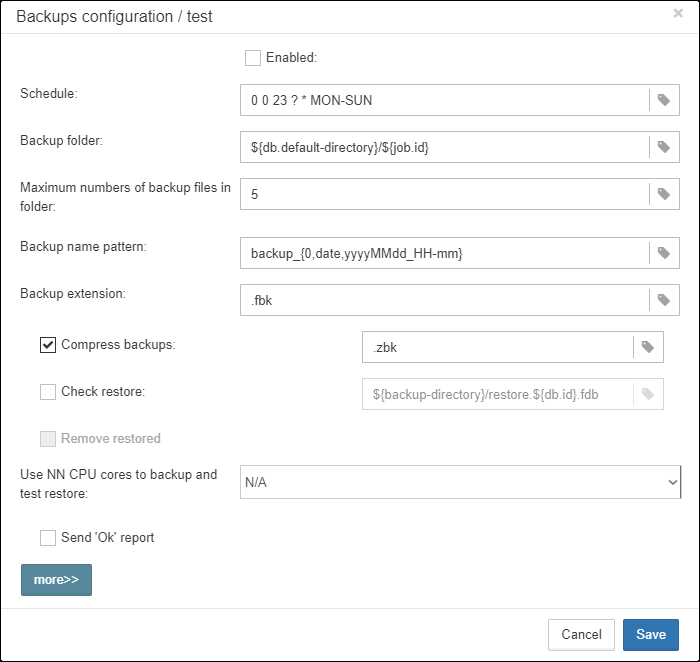

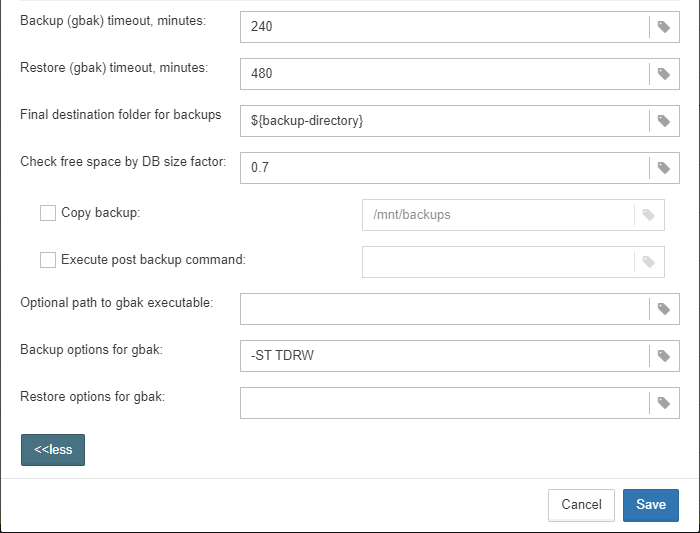

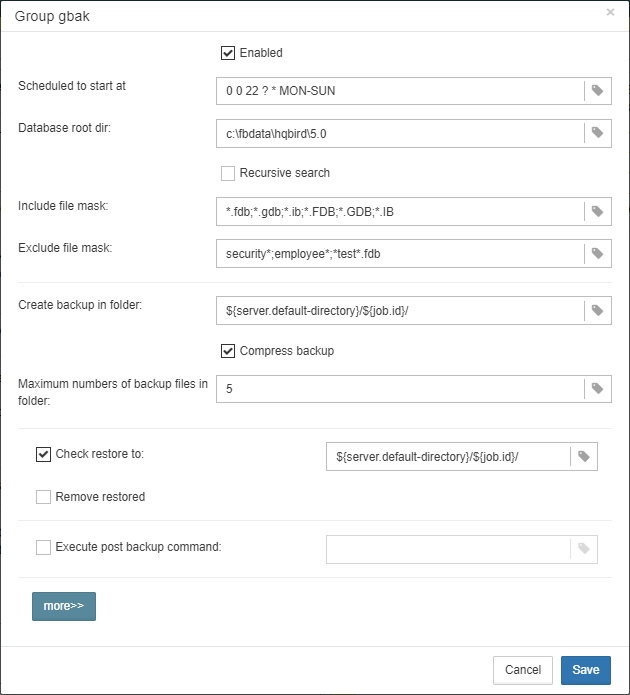

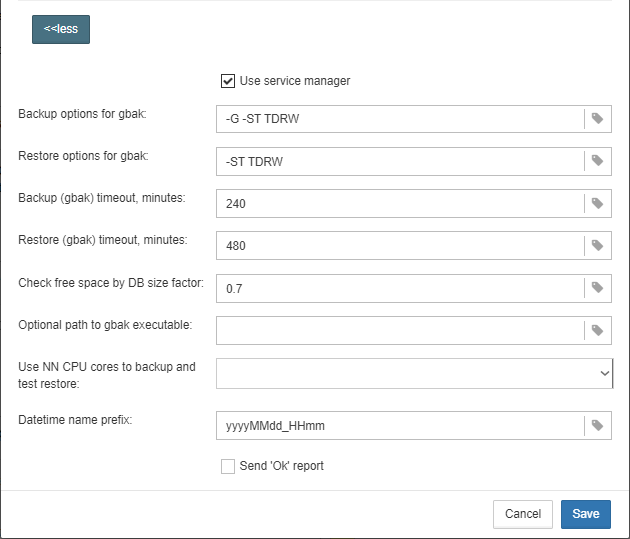

3.4.6. Database: Verified Backup

“Database: Verified Backup” is one of the key jobs to guarantee the safety of data stored in the protected database. During the development of HQbird we had in mind certain recovery scenario, and this scenario implies that the key goal of database protection is to minimize potential losses of data. If we have healthy backup, recovery can be concentrated on saving the most recent data (just entered into the database), and it greatly decreases the time of overall outage.

As you will see below, “Database: Verified Backup” is not just a wrapper for standard gbak functionality and scheduler, this is a smart job which has many built-in rules to prevent problems with backups and provide suitable interface for backups management.

|

“Database: Verified Backup” is disabled by default, but we strongly recommend reviewing of its settings immediately after HQbird setup. |

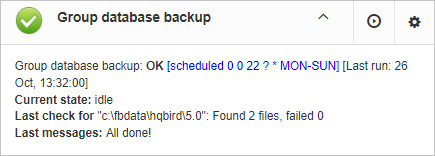

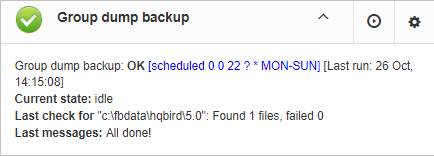

Initially “Database: Verified Backup” job is shown as Ok, though backup was not tried. In this case OK means that backup at least scheduled.

Also this job recognizes files according the name pattern (see below information regarding configuration), and shows the totals number of backups.

After the backup will be done, the widget information will be changed: creation time of last successful backup will be shown, and also the time took to actually perform the backup (only 1 minute 12 seconds at the screenshot with example).

Also, the detailed alert will be send to your email and/or HQbird Control Center:

“Database: Verified Backup” checks the free space at the drive with backup destination, and if it detects that there is not enough free disk space, CRITICAL alert will be sent, and current backup will be canceled (if necessary).

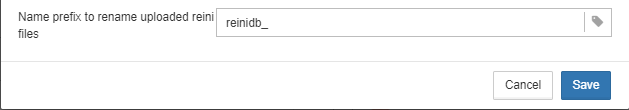

|